RAG - retrieval-augmented generation

Presentation - Empowering GenAI with RAG

RAG is an AI framework for retrieving facts from an external knowledge base to ground large language models (LLMs) on the most accurate, up-to-date information and to give users insight into LLMs' generative process.

- RAG combines retrieval and generation processes to enhance the capabilities of LLMs

- In RAG, the model retrieves relevant information from a knowledge base or external sources

- This retrieved information is then used in conjunction with the model's internal knowledge to generate coherent and contextually relevant responses

- RAG enables LLMs to produce higher-quality and more context-aware outputs compared to traditional generation methods

- Essentially, RAG empowers LLMs to leverage external knowledge for improved performance in various natural language processing tasks
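The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production design: the `KNOWLEDGE_BASE` documents, the bag-of-words cosine scoring, and the prompt wording are all assumptions made for the example. A real system would typically use an embedding model and a vector store, and would send the prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real system would index many documents.
KNOWLEDGE_BASE = [
    "RAG retrieves facts from an external knowledge base before generating.",
    "Fine-tuning updates model weights on task-specific data.",
    "Vector databases store embeddings for similarity search.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: place the retrieved text in front of the question."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


question = "What does RAG retrieve?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE, k=1))
print(prompt)  # the generation step would pass this prompt to an LLM
```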

Why is Retrieval-Augmented Generation important?

- You can think of the LLM as an over-enthusiastic new employee who refuses to stay informed with current events but will always answer every question with absolute confidence.

- Unfortunately, such an attitude can negatively impact user trust and is not something you want your chatbots to emulate!

- RAG is one approach to solving some of these challenges. It redirects the LLM to retrieve relevant information from authoritative, pre-determined knowledge sources.

- Organizations have greater control over the generated text output, and users gain insights into how the LLM generates the response.

Code

- generative-ai/gemini/use-cases/retrieval-augmented-generation/multimodal_rag_langchain.ipynb at main · GoogleCloudPlatform/generative-ai · GitHub

- GitHub - Farzad-R/Advanced-QA-and-RAG-Series: This repository contains advanced LLM-based chatbots for Q&A using LLM agents, and Retrieval Augmented Generation (RAG) and with different databases. (VectorDB, GraphDB, SQLite, CSV, XLSX, etc.)

- example-app-langchain-rag/rag_chain.py at main · streamlit/example-app-langchain-rag · GitHub

- GitHub - langchain-ai/rag-from-scratch

- generative-ai/gemini/qa-ops/building_DIY_multimodal_qa_system_with_mRAG.ipynb at main · GoogleCloudPlatform/generative-ai · GitHub

- Building A RAG System with Gemma, MongoDB and Open Source Models - Hugging Face Open-Source AI Cookbook

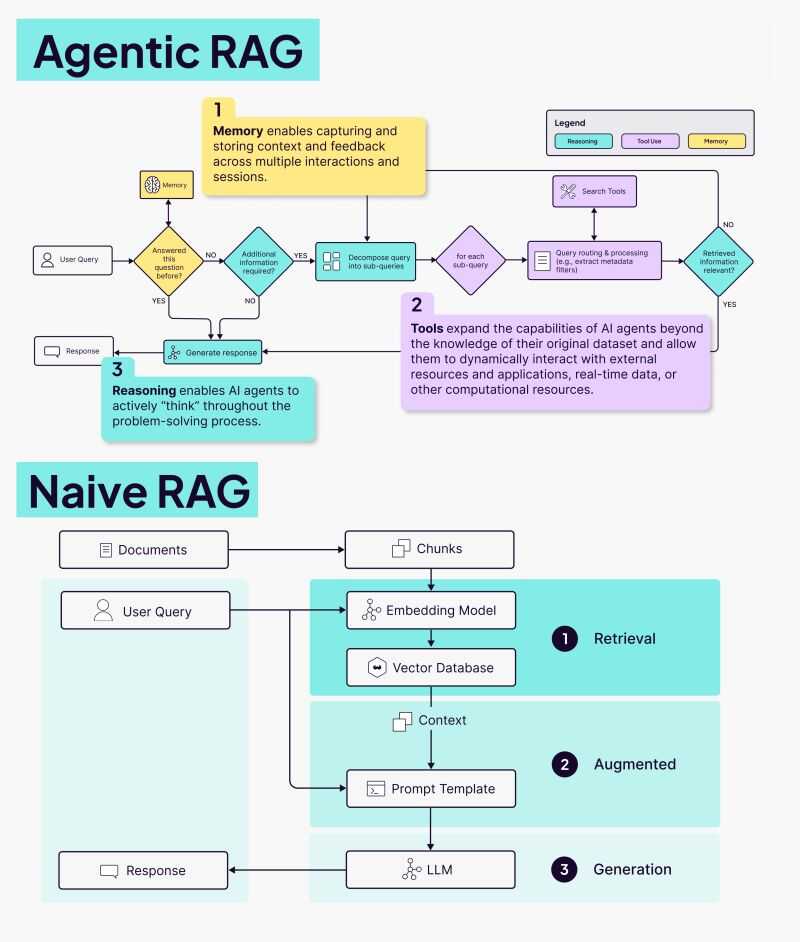

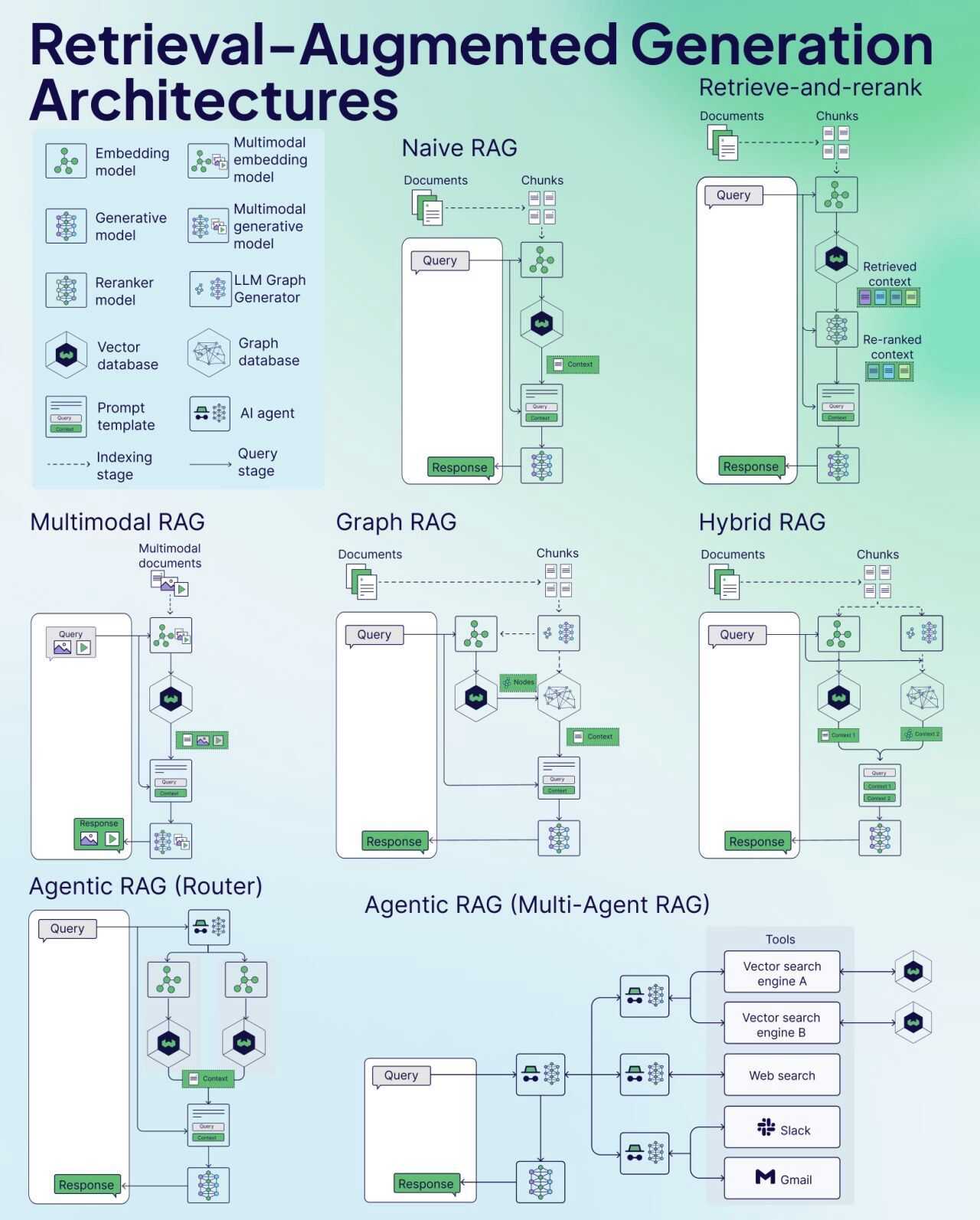

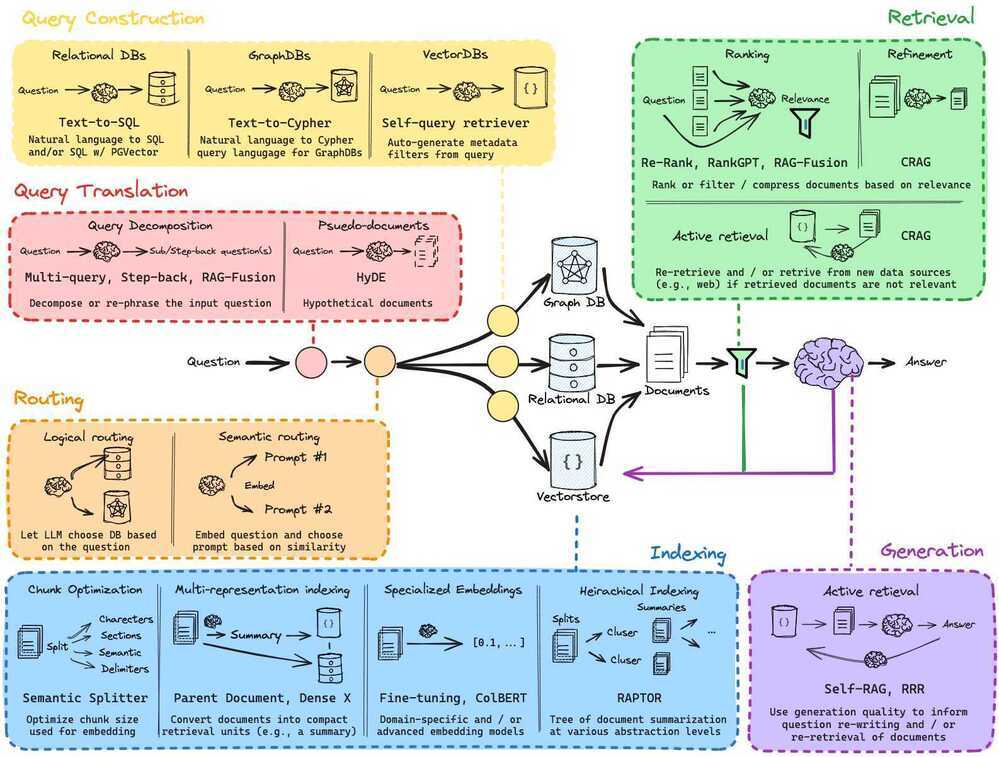

Types of RAG

Advanced

Stop Saying RAG Is Dead – Hamel’s Blog

Advanced RAG Techniques

- Query Expansion (with multiple queries)

- GitHub - pdichone/advanced-rag-techniques

- Downsides

- Lots of results

- Generated queries might not always be relevant or useful

- Results are not always relevant or useful

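Query expansion with multiple queries, and the downsides listed above, can be illustrated with a small sketch. Everything here is an assumption for illustration: `expand_query` is a hypothetical stand-in for an LLM call that would normally generate the query variants, and `search` uses simple token overlap in place of a real vector search.

```python
import re

# Hypothetical toy corpus.
DOCS = [
    "Rerankers reorder retrieved passages by relevance.",
    "Query expansion rewrites one question into several queries.",
    "Chunk overlap keeps sentences from being cut in half.",
]


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, docs: list[str], k: int = 2) -> list[tuple[str, float]]:
    """Stand-in for vector search: rank docs by Jaccard token overlap."""
    q = tokens(query)
    scored = [(d, len(q & tokens(d)) / len(q | tokens(d))) for d in docs]
    scored = [(d, s) for d, s in scored if s > 0]  # drop docs with no overlap
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def expand_query(query: str) -> list[str]:
    """Hypothetical: an LLM would normally generate these variants."""
    return [
        query,
        "rewrite a question into multiple queries",
        "reorder passages by relevance",  # a variant that drifts off topic
    ]


def multi_query_search(query: str, docs: list[str], k: int = 2) -> list[tuple[str, float]]:
    """Run every variant, then merge results, keeping each doc's best score."""
    best: dict[str, float] = {}
    for variant in expand_query(query):
        for doc, score in search(variant, docs, k):
            best[doc] = max(score, best.get(doc, 0.0))
    return sorted(best.items(), key=lambda pair: pair[1], reverse=True)


results = multi_query_search("what is query expansion", DOCS)
for doc, score in results:
    print(f"{score:.2f}  {doc}")
```

In this toy run, the off-topic variant pulls in the reranker document even though the original question was about query expansion, which is one reason a filtering or reranking step is often placed after the merge.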

Advanced RAG Techniques: Unlocking the Next Level | by Tarun Singh | Medium

RIG - Retrieval Interleaved Generation - DataGemma through RIG and RAG - by Bugra Akyildiz

Contextual Retrieval

Contextual Retrieval (introduced by Anthropic) addresses a common issue in traditional Retrieval-Augmented Generation (RAG) systems: individual text chunks often lack enough context for accurate retrieval and understanding.

Contextual Retrieval enhances each chunk by adding specific, explanatory context before embedding or indexing it. This preserves the relationship between the chunk and its broader document, significantly improving the system's ability to retrieve and use the most relevant information.
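A rough sketch of the idea, under stated assumptions: `describe_chunk` is a hypothetical stand-in for the LLM call that would write a short, chunk-specific context from the full document, and the `Chunk` fields and example text are invented for illustration. Only the contextualized string would be passed on to the embedding model or keyword index.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_title: str  # hypothetical metadata carried alongside the chunk
    section: str
    text: str


def describe_chunk(chunk: Chunk) -> str:
    """Hypothetical stand-in for an LLM that situates the chunk in its document."""
    return f"This chunk is from '{chunk.doc_title}', section '{chunk.section}'."


def contextualize(chunk: Chunk) -> str:
    """Prepend the explanatory context so it is embedded together with the chunk."""
    return f"{describe_chunk(chunk)}\n{chunk.text}"


chunk = Chunk(
    doc_title="ACME Q2 2023 report",
    section="Revenue",
    text="Revenue grew 3% over the previous quarter.",
)
indexed_text = contextualize(chunk)
print(indexed_text)  # this string, not the bare chunk, is what gets indexed
```

On its own, the chunk text does not say which company or quarter it refers to; the prepended line carries that information into the index.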

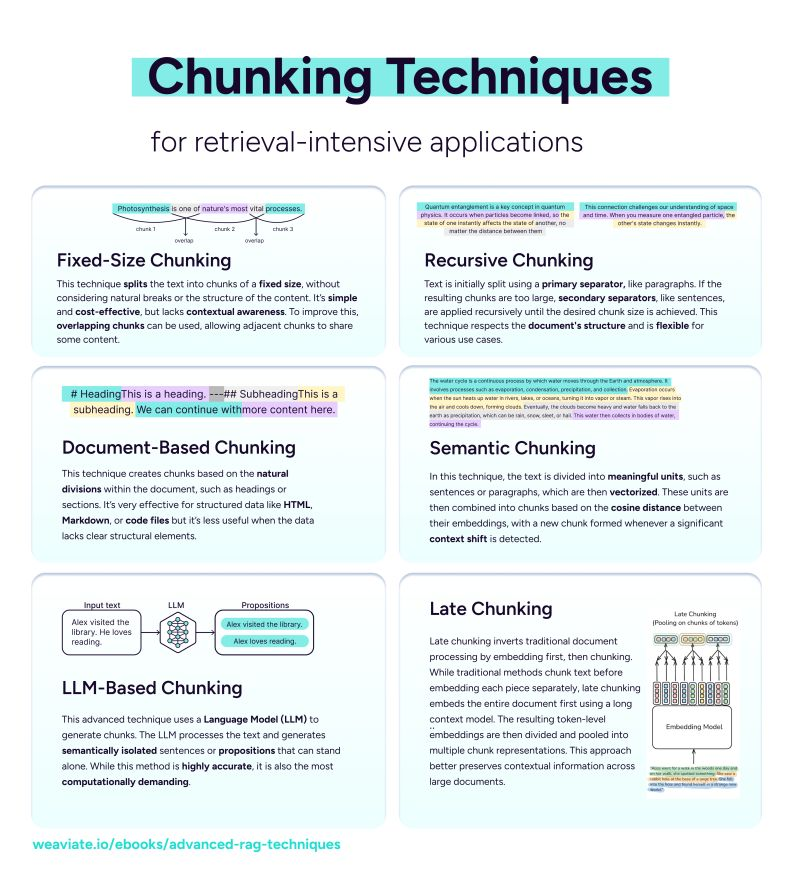

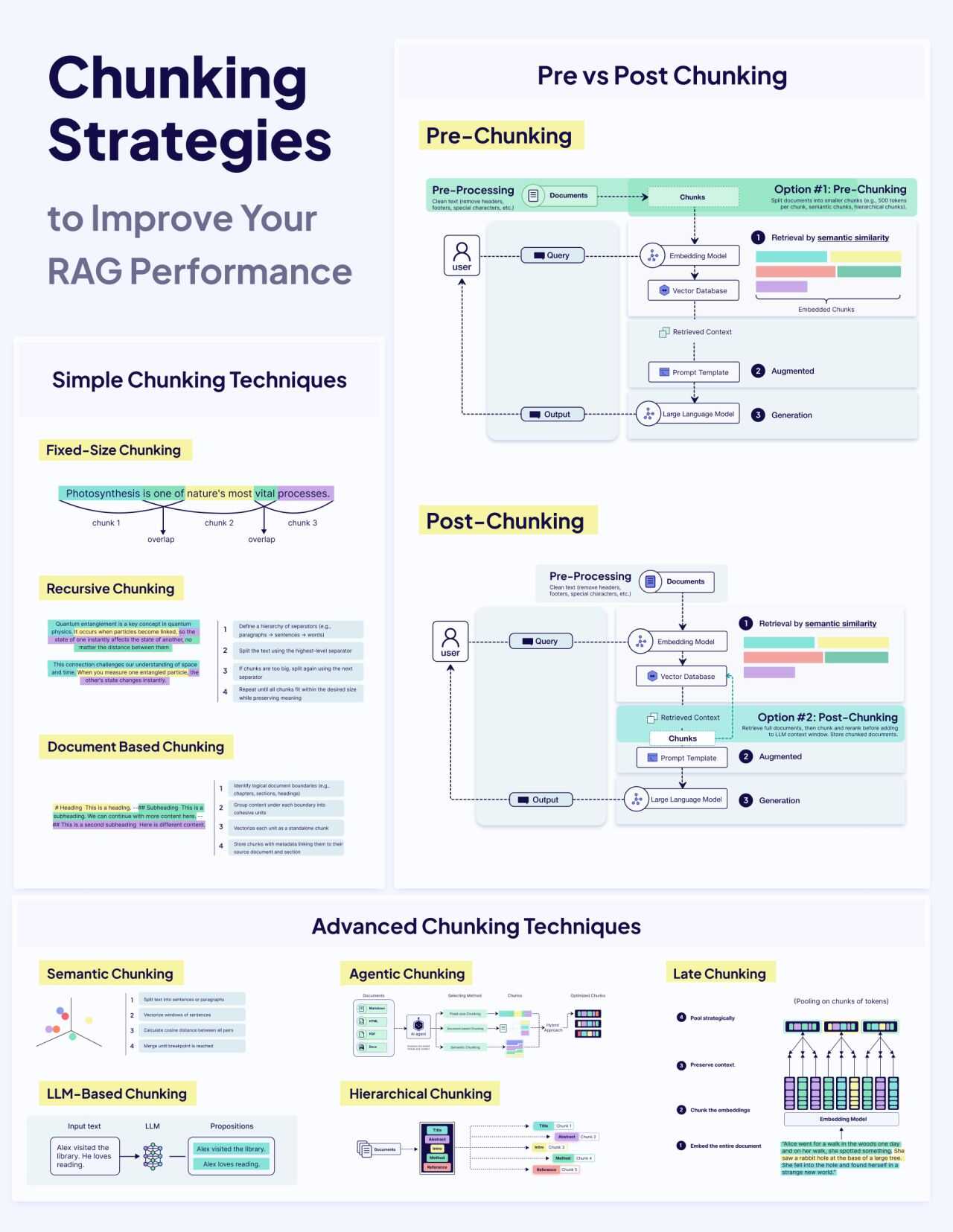

Chunking

- Fixed Size Chunking - The simplest approach - split text into chunks of consistent size (e.g., 100 words, 200 characters). Easy to implement but might break sentences awkwardly.

- Recursive Chunking - Hierarchically splits documents, preserving structure while creating manageable chunks. Great for maintaining context across different levels of detail.

- Document-based Chunking - Uses natural document markers like paragraphs, sections, or chapters as boundaries. Keeps related information together but can create wildly different chunk sizes.

- Semantic Chunking - Variable-size chunks based on meaning rather than arbitrary markers. More sophisticated but computationally intensive.

- Late Chunking - Embeds first, then chunks - preserving more contextual information in the vectors themselves.
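The first two strategies are simple enough to sketch directly. This is an illustrative sketch only: the function names, default sizes, and separator order are assumptions, and unlike library splitters, `recursive_chunks` does not merge small pieces back together and discards the separator it splits on.

```python
def fixed_size_chunks(text: str, size: int = 100, overlap: int = 0) -> list[str]:
    """Fixed-size chunking: `size` words per chunk, with optional word overlap."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop early enough that the final chunk is not just a repeat of the overlap.
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, last_start, step)]


def recursive_chunks(
    text: str,
    max_chars: int = 200,
    separators: tuple[str, ...] = ("\n\n", "\n", ". ", " "),
) -> list[str]:
    """Recursive chunking: split on the coarsest separator present, then
    recurse with finer separators into any piece that is still too long."""
    text = text.strip()
    if len(text) <= max_chars:
        return [text] if text else []
    for i, sep in enumerate(separators):
        if sep in text:
            chunks: list[str] = []
            for piece in text.split(sep):
                chunks.extend(recursive_chunks(piece, max_chars, separators[i + 1:]))
            return chunks
    # No usable separator left: fall back to a hard character cut.
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


print(fixed_size_chunks("a b c d e f g h i j", size=4, overlap=2))
doc = "Intro paragraph.\n\nSecond paragraph is longer. It has two sentences."
print(recursive_chunks(doc, max_chars=30))
```

The fixed-size splitter cuts wherever the word count says to, while the recursive one keeps the short first paragraph whole and only drops to sentence boundaries for the paragraph that exceeds the limit.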

Chunking Strategies to Improve Your RAG Performance | Weaviate

Reranking

- Rerankers and Two-Stage Retrieval | Pinecone

- What is a reranker and do I need one? - ZeroEntropy

- zcookbook/guides/rerank_llamaparsed_pdfs/rerank_llamaparsed_pdfs.ipynb at main · zeroentropy-ai/zcookbook · GitHub

Others

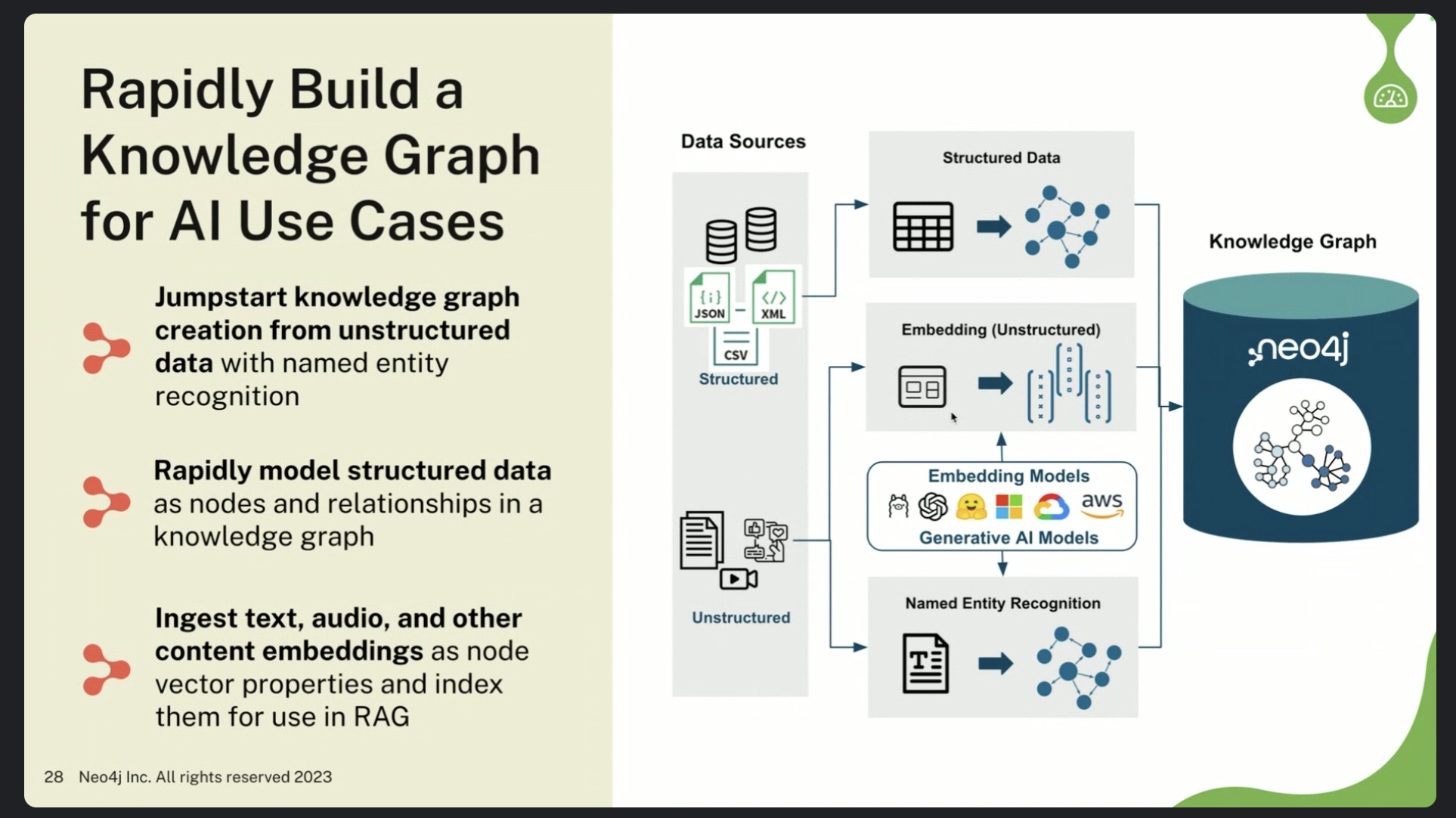

GraphRAG

- Enhancing the Accuracy of RAG Applications With Knowledge Graphs | by Tomaz Bratanic | Neo4j Developer Blog | Medium

- Enhance Your RAG Applications with Knowledge Graph RAG | Build Intelligent Apps With SingleStore

- GraphRAG: Unlocking LLM discovery on narrative private data - Microsoft Research

- GraphRAG: New tool for complex data discovery now on GitHub - Microsoft Research

- Neo4j | 🦜️🔗 LangChain

- langchain cypher qa chain


Cache-Augmented Generation (CAG)

RAG vs. CAG: Solving Knowledge Gaps in AI Models - YouTube

GitHub - hhhuang/CAG: Cache-Augmented Generation: A Simple, Efficient Alternative to RAG

Tools

- GitHub - bitswired/website-to-knowledge-base

- GitHub - weaviate/Verba: Retrieval Augmented Generation (RAG) chatbot powered by Weaviate

- GitHub - deepsense-ai/ragbits: Building blocks for rapid development of GenAI applications

- GitHub - vitali87/code-graph-rag: Search Monorepos and get relevant answers

- GitHub - microsoft/markitdown: Python tool for converting files and office documents to Markdown.

- GitHub - HKUDS/RAG-Anything: "RAG-Anything: All-in-One RAG System"

- GitHub - yichuan-w/LEANN: RAG on Everything with LEANN. Enjoy 97% storage savings while running a fast, accurate, and 100% private RAG application on your personal device.

- Our evaluation shows that LEANN reduces index size to under 5% of the original raw data, achieving up to 50 times smaller storage than standard indexes, while maintaining 90% top-3 recall in under 2 seconds on real-world question answering benchmarks.

NoCode Tools

- RAGFlow - RAGFlow is a RAG engine for deep document understanding! It lets you build enterprise-grade RAG workflows on complex docs with well-founded citations. Supports multimodal data understanding, web search, deep research, etc. 100% open-source with 59k+ stars!

- xpander - xpander is a framework-agnostic backend for agents that manages memory, tools, multi-user states, events, guardrails, etc. While it is not a core no-code tool, you can build, test, and deploy Agents by primarily using the UI. Compatible with LlamaIndex, CrewAI, etc.

- Transformer Lab - Transformer Lab is an app to experiment with LLMs:

- Train, fine-tune, or chat.

- One-click LLM download (DeepSeek, Gemma, etc.)

- Drag-n-drop UI for RAG.

- Built-in logging, and more. 100% open-source and local!

- GitHub - transformerlab/transformerlab-app: Open Source Application for Advanced LLM + Diffusion Engineering: interact, train, fine-tune, and evaluate large language models on your own computer.

- Llama Factory - LLaMA-Factory lets you train and fine-tune open-source LLMs and VLMs without writing any code. Supports 100+ models, multimodal fine-tuning, PPO, DPO, experiment tracking, and much more! 100% open-source with 50k stars!

- Langflow - Langflow is a drag-and-drop visual tool to build AI agents. It lets you build and deploy AI-powered agents and workflows. Supports all major LLMs, vector DBs, etc. 100% open-source with 82k+ stars!

- AutoAgent - AutoAgent is a zero-code framework that lets you build and deploy Agents using natural language. It comes with:

- Universal LLM support

- Native self-managing Vector DB

- Function-calling and ReAct interaction modes.

- 100% open-source with 5k stars!

- GitHub - HKUDS/AutoAgent: "AutoAgent: Fully-Automated and Zero-Code LLM Agent Framework"

- GitHub - truefoundry/cognita: RAG (Retrieval Augmented Generation) Framework for building modular, open source applications for production by TrueFoundry

Links

- What is RAG (Retrieval-Augmented Generation)?

- RAG Best Practices: Enhancing Large Language Models with Retrieval-Augmented Generation | by Juan C Olamendy | Medium

- A Gentle Introduction to Retrieval Augmented Generation (RAG)

- REALM: Integrating Retrieval into Language Representation Models

- Learn RAG Fundamentals and Advanced Techniques

- Using ChatGPT to Search Enterprise Data with Pamela Fox - YouTube

- What is retrieval-augmented generation? | IBM Research Blog

- What is Retrieval-Augmented Generation (RAG)? - YouTube

- Vector Search RAG Tutorial - Combine Your Data with LLMs with Advanced Search - YouTube

- RAG - Retrieval-Augmented Generation - Full Guide - Build a RAG System to Chat with Your Documents - YouTube

- GitHub - beaucarnes/vector-search-tutorial

- DSPy: Not Your Average Prompt Engineering

- langchain/cookbook/RAPTOR.ipynb at master · langchain-ai/langchain · GitHub

- Introduction to Retrieval Augmented Generation (RAG)

- A beginner's guide to building a Retrieval Augmented Generation (RAG) application from scratch

- Building RAG with Open-Source and Custom AI Models

- RAG - Retrieval Augmented Generation - YouTube

- How to Choose the Right Embedding Model for Your LLM Application | MongoDB

- Building Production RAG Over Complex Documents - YouTube

- Retrieval-Augmented Generation (RAG) Patterns and Best Practices - YouTube

- RAG (Retrieval Augmented Generation) - YouTube

- Exploring Hacker News by mapping and analyzing 40 million posts and comments for fun | Wilson Lin

- Mastering RAG Systems for LLMs

- Build a real-time RAG chatbot using Google Drive and Sharepoint

- Building a Knowledge base for custom LLMs using Langchain, Chroma, and GPT4All | by Anindyadeep | Medium

- Introduction to Retrieval Augmented Generation (RAG) | Weaviate

- Advanced RAG Techniques | Weaviate

- Multimodal Retrieval Augmented Generation(RAG) | Weaviate

- What is Agentic RAG | Weaviate

- RAG with BigQuery - YouTube

- RAG Crash Course - Daily Dose of Data Science

- Mastering RAG: How To Architect An Enterprise RAG System