LangChain

- Welcome to LangChain - 🦜🔗 LangChain 0.0.180

- Building LLM applications for production

- Introduction to LangChain LLM: A Beginner’s Guide

- How to Build LLM Applications with LangChain | DataCamp

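```shell
# LangChain (quoted so shells such as zsh don't treat the brackets as a glob)
python -m pip install --upgrade "langchain[llm]"
# Chroma, a local vector store
pip install chromadb
# PDF parsing, used by LangChain's PyPDFLoader
pip install pypdf
# Chainlit, a Python framework for building chat UIs
pip install chainlit
# Launch Chainlit's built-in hello app to check the install
chainlit hello
# Run the app defined in document_qa.py
chainlit run document_qa.py
```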

LangChain vs LlamaIndex

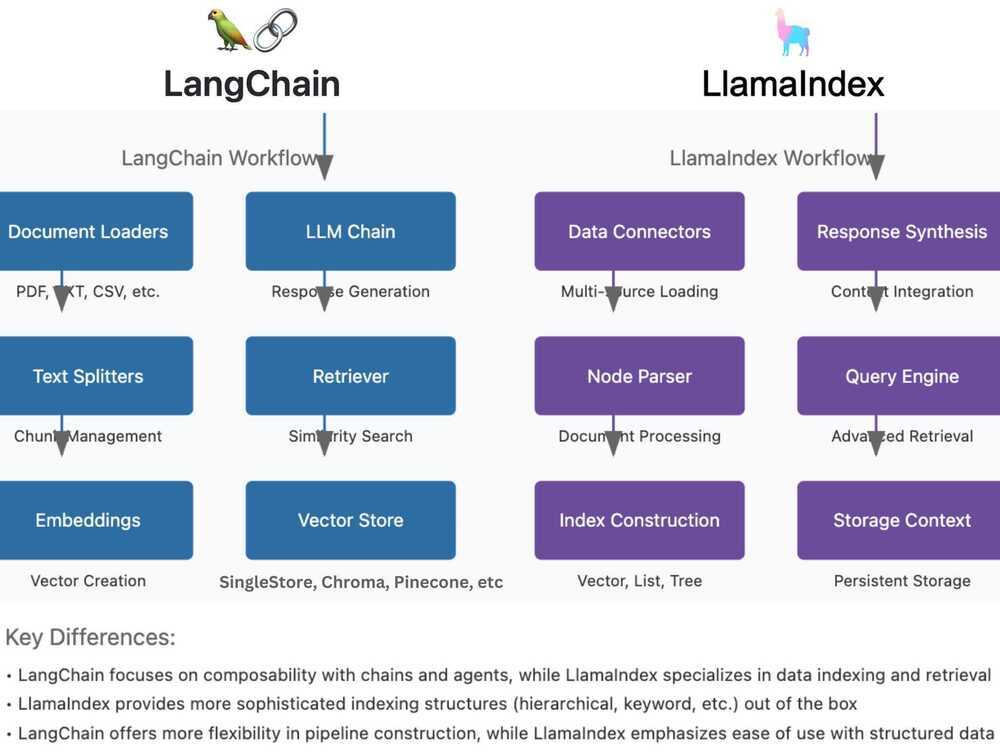

Both LangChain and LlamaIndex offer distinct approaches to implementing retrieval-augmented generation (RAG) workflows.

LangChain follows a modular pipeline: Document Loaders read various file formats, Text Splitters break the documents into chunks, and an Embeddings model turns each chunk into a vector.

It then stores those vectors in a Vector Store such as SingleStore, FAISS, or Chroma, uses a Retriever to run similarity search against the store, and finally passes the retrieved chunks to an LLM Chain that generates the response. The framework emphasizes composability and flexibility in how the pipeline is assembled.
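To make the LangChain stages described above concrete, here is a minimal, dependency-free Python sketch of the same flow: load, split, embed, store, retrieve, then assemble a prompt. It does not use LangChain's API. Every class and function here (`Document`, `load_documents`, `split_text`, `InMemoryVectorStore`, `build_prompt`) is hypothetical, the "embedding" is a word-count vector standing in for a real embedding model, and no LLM is called. Only the method name `similarity_search` echoes LangChain's vector store interface.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Document:
    """A loaded document: its text plus metadata such as the source name."""
    text: str
    metadata: dict = field(default_factory=dict)


def load_documents(sources):
    """Loader stand-in (hypothetical): wraps in-memory strings instead of reading files."""
    return [Document(text, {"source": name}) for name, text in sources.items()]


def split_text(doc, chunk_size=8, overlap=2):
    """Word-based splitter: windows of `chunk_size` words that share `overlap` words."""
    words = doc.text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(Document(" ".join(words[start:start + chunk_size]), dict(doc.metadata)))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy embedding: a sparse term-frequency vector (real pipelines call an embedding model)."""
    return Counter(tokenize(text))


def cosine(a, b):
    # Counter returns 0 for missing terms, so absent words contribute nothing.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Vector store stand-in: keeps (vector, chunk) pairs in a list and ranks them by cosine."""

    def __init__(self):
        self._rows = []

    def add(self, chunks):
        for chunk in chunks:
            self._rows.append((embed(chunk.text), chunk))

    def similarity_search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(question, chunks):
    """LLM-chain stand-in: builds the prompt a model would receive; no model is called."""
    context = "\n".join(f"- {c.text} (source: {c.metadata['source']})" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


docs = load_documents({
    "chroma.txt": "Chroma is an open-source vector database that stores embeddings for retrieval.",
    "pypdf.txt": "pypdf is a Python library for reading PDF files page by page.",
})
store = InMemoryVectorStore()
for doc in docs:
    store.add(split_text(doc))

question = "Which vector database stores embeddings?"
hits = store.similarity_search(question, k=1)
print(build_prompt(question, hits))
```

In a real LangChain app each stand-in would be swapped for a library component (a PDF loader, a text splitter, an embedding model, Chroma or FAISS), which is the composability the comparison refers to.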

On the other hand, LlamaIndex begins with Data Connectors that load data from multiple sources, uses a Node Parser to split documents into nodes (chunks that carry metadata and links to neighboring chunks), and offers several Index Construction options, including vector, list, and tree indexes.

It uses a Storage Context for persistent storage, a Query Engine for retrieval, and Response Synthesis to combine the retrieved context into a final answer. LlamaIndex specializes in data indexing and retrieval and offers more sophisticated indexing structures out of the box, while keeping a focus on ease of use with structured data.
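A similarly hedged sketch for the LlamaIndex side: a hypothetical node parser that links each node to its neighbors, a list index that returns every node, and a tree index that builds parent nodes bottom-up and descends from the root toward the best-matching leaf. None of this is LlamaIndex's API; all names are illustrative. As far as I know, LlamaIndex's tree index has an LLM write each parent's summary and choose which child to follow. Here, as an assumption for the sake of a runnable example, parents simply concatenate their children's text and the child sharing the most words with the query wins.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A chunk of text plus metadata and links to its neighbors."""
    node_id: str
    text: str
    metadata: dict
    prev_id: Optional[str] = None
    next_id: Optional[str] = None


def parse_nodes(text, source):
    """Hypothetical node parser: one node per sentence, linked prev/next."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    nodes = [Node(f"{source}-{i}", s, {"source": source}) for i, s in enumerate(sentences)]
    for prev, nxt in zip(nodes, nodes[1:]):
        prev.next_id, nxt.prev_id = nxt.node_id, prev.node_id
    return nodes


def _words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(a, b):
    """Distinct words two strings share (stand-in for an LLM relevance judgment)."""
    return len(_words(a) & _words(b))


class ListIndex:
    """Keeps nodes in order; retrieval returns all of them for response synthesis."""

    def __init__(self, nodes):
        self.nodes = list(nodes)

    def retrieve(self, query):
        return list(self.nodes)


@dataclass
class TreeNode:
    summary: str
    children: list = field(default_factory=list)
    leaf: Optional[Node] = None


class TreeIndex:
    """Builds parents bottom-up, `branching` children each, until one root remains."""

    def __init__(self, nodes, branching=2):
        if not nodes:
            raise ValueError("TreeIndex needs at least one node")
        level = [TreeNode(n.text, leaf=n) for n in nodes]
        while len(level) > 1:
            level = [
                # Stand-in summary: concatenated child text instead of an LLM-written summary.
                TreeNode(" ".join(c.summary for c in level[i:i + branching]),
                         children=level[i:i + branching])
                for i in range(0, len(level), branching)
            ]
        self.root = level[0]

    def retrieve(self, query):
        current = self.root
        while current.children:  # descend toward the child that best matches the query
            current = max(current.children, key=lambda c: overlap(query, c.summary))
        return [current.leaf]


text = ("LangChain composes loaders, splitters and retrievers. "
        "LlamaIndex builds indexes over nodes. "
        "A tree index stores summaries at each level. "
        "A list index keeps nodes in order.")
nodes = parse_nodes(text, "notes.txt")
print(len(ListIndex(nodes).retrieve("tree summaries")))     # 4
print(TreeIndex(nodes).retrieve("tree summaries")[0].text)  # A tree index stores summaries at each level.
```

The contrast mirrors the index options listed above: the list index hands every node to response synthesis, while the tree index narrows the query down to a single leaf.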

The key distinction lies in their priorities: LangChain favors customization and pipeline flexibility, while LlamaIndex emphasizes structured data handling and advanced indexing, so each framework suits different RAG use cases.

No matter which AI framework you pick, I always recommend a robust data platform like SingleStore that supports not just vector storage but also hybrid search, low latency, fast data ingestion, all data types, integration with AI frameworks, and more.

- A Beginner’s Guide to Building LLM-Powered Applications with LangChain! - DEV Community

- Understanding LlamaIndex in 9 Minutes! - YouTube

LangGraph

- Build Agentic Workflows Using LangGraph! - YouTube

- LangChain vs. LangGraph: Which AI Framework Is Right for You?

- AI Agent Workflows: A Complete Guide on Whether to Build With LangGraph or LangChain | by Sandi Besen | Towards Data Science

- GitHub - neo4j-labs/llm-graph-builder: Neo4j graph construction from unstructured data using LLMs

- Neo4j LLM Knowledge Graph Builder - Extract Nodes and Relationships from Unstructured Text

- GraphRAG Python Package: Accelerating GenAI With Knowledge Graphs

- User Guide: RAG — neo4j-graphrag-python documentation

- GenAI Stack

- Building LangGraph: Designing an Agent Runtime from first principles

Courses

LangSmith

- LangSmith

- What is LangSmith and why should I care as a developer? | by Logan Kilpatrick | Around the Prompt | Medium