Intro

LLMs make good programmers great; they do not make bad programmers good

Moving from the information age to the knowledge age

LMM - Large Multimodal Model

LLM

- A large language model (LLM) is a type of artificial intelligence program that can recognize and generate text, among other tasks.

- LLMs are very large models that are pre-trained on vast amounts of data.

- They are built on the transformer architecture, a set of neural networks that consists of an encoder and a decoder with self-attention capabilities.

- It can perform completely different tasks such as answering questions, summarizing documents, translating languages and completing sentences.

- OpenAI's GPT-3 model has 175 billion parameters and a context window of 2,048 tokens per prompt.

- In simpler terms, an LLM is a computer program that has been fed enough examples to be able to recognize and interpret human language or other types of complex data.

- The quality of the samples affects how well an LLM learns natural language, so an LLM's developers may use a more curated data set.

Types

Base LLM

- Predicts the next word, based on its text training data

- Prompt - What is the capital of France?

- Ans - What is France's largest city?

- Ans - What is France's population?
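The "predicts next word" behaviour of a base model can be sketched with a toy bigram counter. This is only an illustration under loud assumptions: a real LLM uses a transformer over subword tokens, not word-pair counts, and the names `train_bigram` and `continue_text` and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict:
    """Count, for each word, which words follow it in the corpus."""
    tokens = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(follows: dict, prompt: str, max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        if not words:
            break
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy training text (an assumption, not real training data).
corpus = "the capital of france is paris . paris is the capital of france ."
model = train_bigram(corpus)
print(continue_text(model, "the capital", max_new_words=2))
# prints: the capital of france
```

The model only continues the text with whatever usually came next in its training data; nothing in it tries to answer a question, which is why a base LLM may respond to a question with more questions.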

Instruction Tuned LLM

- Tries to follow instructions

- Fine-tuned on instructions and on good attempts at following those instructions.

- RLHF: Reinforcement Learning from Human Feedback (see: Human Feedback in AI: The Essential Ingredient for Success | Label Studio; Create a High-Quality Dataset for RLHF | Label Studio)

- Helpful, Honest, Harmless

- Prompt - What is the capital of France?

- Ans - The capital of France is Paris.

GPT-3 / GPT-4

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt.

The architecture is a standard transformer network (with a few engineering tweaks) with the unprecedented size of a 2048-token-long context and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks.
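As a rough sanity check on the storage figure, raw weight size is simply parameter count times bytes per parameter. The sketch below assumes densely stored weights at a single fixed precision; the precisions listed and the helper name `storage_gb` are illustrative assumptions, not something the notes above state.

```python
PARAMS = 175_000_000_000  # GPT-3 parameter count quoted above

# Assumed storage precisions (bytes per parameter), for illustration only.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def storage_gb(num_params: int, bytes_per_param: int) -> float:
    """Raw weight size in gigabytes (1 GB = 1e9 bytes), ignoring any overhead."""
    return num_params * bytes_per_param / 1e9


for dtype, size in BYTES_PER_PARAM.items():
    print(f"{dtype}: {storage_gb(PARAMS, size):.0f} GB")
# float32: 700 GB
# float16: 350 GB
# int8: 175 GB
```

At 4 bytes per parameter the weights alone come to about 700 GB, so the 800 GB quoted above presumably reflects a different accounting than raw weights at one of these precisions.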

Past, Present & Future

- "The Past, Present, and Future of GenAI" - Yariv Adan, Google - KEYNOTE at PMF23 - YouTube

- Generative AI: Past, Present, and Future – A Practitioner's Perspective | PPT

- The AI Revolution Is Underhyped | Eric Schmidt | TED - YouTube

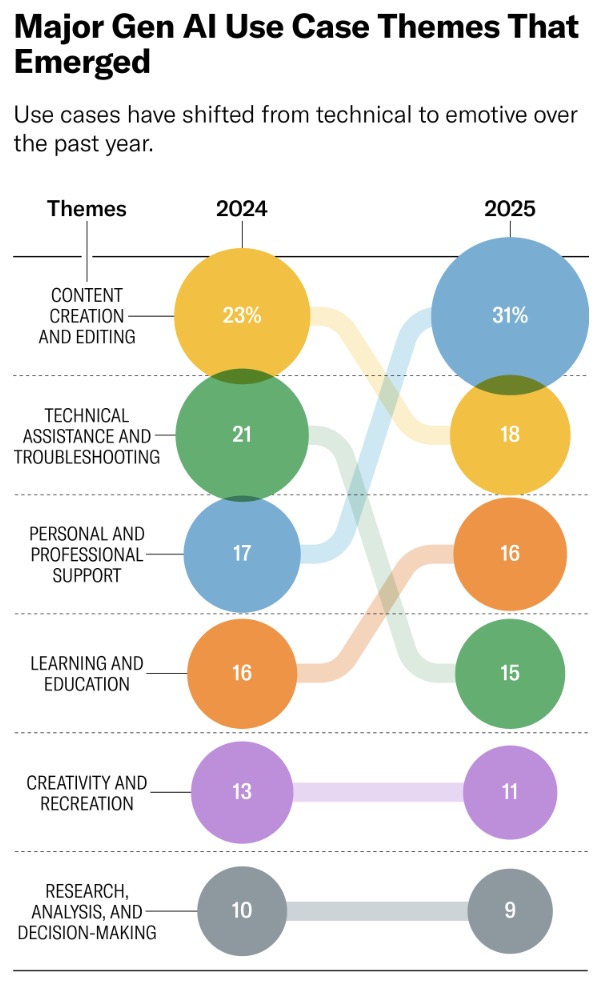

- How People Are Really Using Gen AI in 2025

Misconceptions

- LLMs Actually Understand Language Like Humans Do

- More Parameters Always Mean Better Performance

- LLMs Are Just Autocomplete on Steroids

- Fine-Tuning Always Makes Models Better

- LLMs Are Deterministic: Same Input, Same Output

- Bigger Context Windows Are Always Better

- LLMs Can Replace Traditional Machine Learning for All Language Tasks

- Prompt Engineering Is Just Trial and Error

- LLMs Will Soon Replace All Software Developers

10 Common Misconceptions About Large Language Models - MachineLearningMastery.com
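The "same input, same output" misconception in the list above can be illustrated with temperature sampling over next-token scores. This is a minimal sketch, not how any particular model or API is implemented: the candidate tokens, the logit values, and the function names are all made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Pick the next token: greedy at temperature 0, random draw otherwise."""
    if temperature == 0:
        best = max(range(len(logits)), key=logits.__getitem__)
        return tokens[best]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical candidates and scores for the next token after a prompt.
tokens = ["Paris", "Lyon", "France"]
logits = [2.0, 1.0, 0.5]

rng = random.Random(0)
greedy = [sample_token(tokens, logits, 0, rng) for _ in range(5)]
sampled = [sample_token(tokens, logits, 1.0, rng) for _ in range(200)]
print(set(greedy))   # always the single highest-scoring token
print(set(sampled))  # usually several different tokens
```

With a temperature above zero the same prompt can yield different continuations on different runs; only greedy decoding in this sketch is repeatable by construction.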

Datasets

MMLU Dataset | Papers With Code - Massive Multitask Language Understanding

Links

- How to create a private ChatGPT with your own data | by Mick Vleeshouwer | Mar, 2023 | Medium

- I Built a Hedge Fund Run by AI Agents - YouTube

- State of GPT | BRK216HFS - YouTube

- EMERGENCY EPISODE: Ex-Google Officer Finally Speaks Out On The Dangers Of AI! - Mo Gawdat | E252 - YouTube

- WARNING: ChatGPT Could Be The Start Of The End! Sam Harris - YouTube

- Getting Started with LLMs: A Quick Guide to Resources and Opportunities

- DoctorGPT: Offline & Passes Medical Exams! - YouTube

- The AI-Powered Tools Supercharging Your Imagination | Bilawal Sidhu | TED - YouTube

- The True Cost of Compute - YouTube

- Welcome to State of AI Report 2023

- State of AI Report 2023 - ONLINE - Google Slides

- [#29] AI Evolution: From AlexNet to Generative AI - Redefining the Paradigm of Software Development

- Google "We Have No Moat, And Neither Does OpenAI"

- AGI-Proof Jobs: Navigating the Impending Obsolescence of Human Labor in the Age of AGI - YouTube

- A DeepMind AI rivals the world's smartest high schoolers at geometry

- New Theory Suggests Chatbots Can Understand Text | Quanta Magazine

- The case for-and against-rapid AI-driven growth

- What I learned from looking at 900 most popular open source AI tools

- Exploring GaiaNet: The Future of Decentralized AI | The Perfect Blend of Web 3.0 and AI - YouTube

- Generative AI | PPT

- Foundational Models and Compute Trends - by Bugra Akyildiz

- What if LLM is the ultimate data janitor

- How to build an infrastructure from scratch to train a 70B Model?

- On the Factory Floor: ML Engineering for Industrial-Scale Ads Recommendation Models from Google

- Financial Statement Analysis with Large Language Models

- Scaling Meta’s Infra with GenAI: Journey to faster and smarter Incident Response - YouTube

- AWS re:Invent 2023 - Navigating the future of AI: Deploying generative models on Amazon EKS (CON312) - YouTube

- GenAI Training In Production: Software, Hardware & Network Considerations - YouTube

- How I Use "AI"

- How might LLMs store facts | Chapter 7, Deep Learning - YouTube

- Introduction to Generative AI - YouTube

- Introduction to Large Language Models - YouTube

- Welcome to State of AI Report 2024

- How Much Trust Do You Have with LLM-Based Solutions? • Matthew Salmon • GOTO 2024 - YouTube

- Building LLMs from the Ground Up: A 3-hour Coding Workshop - YouTube

- 3Blue1Brown - Large Language Models explained briefly

- Generative AI Fine Tuning LLM Models Crash Course - YouTube

- A Visual Guide to LLM Agents - by Maarten Grootendorst

- [2205.11916] Large Language Models are Zero-Shot Reasoners

- BERT Demystified: Like I’m Explaining It to My Younger Self

- I got fooled by AI-for-science hype—here's what it taught me

- Why Finishing with AI Feels Harder Than Starting- My Confessions

- Artificial Intelligence (AI) : 20 Must Know Terminology (Basics to Advanced)

- I think this is one of the best moments to be an AI/ML Engineer! | Alex Razvant

- LLMs + Coding Agents = Security Nightmare

- Networked Saas is the new AI business model replacing per-seat pricing

- Showing Delivery Date at 10x Scale with 1/10th Latency | by Dhruvik Shah | Flipkart Tech Blog

- Language Models as Fact Checkers? | Research - AI at Meta

- How LLMs Handle Infinite Context With Finite Memory | Towards Data Science