Tools

Informatica PowerCenter / IICS

Informatica PowerCenter is an ETL tool: it extracts data from source systems, transforms it, and loads it into target systems. It is typically used to build data warehouses for enterprises in many industries. PowerCenter can connect to:

- Enterprise database systems

- Mainframe systems

- Middleware

- Midrange systems

- Analytics tools like Tableau

- Cloud-based systems like Microsoft Azure and AWS

Informatica Intelligent Cloud Services (IICS) is a cloud-based platform for integrating and synchronizing data and applications. It offers functionality similar to PowerCenter but is delivered as a service accessed over the internet. IICS lets users run ETL (Extract, Transform, Load) jobs in the cloud.

Some transformations in IICS include:

- Lookup Transformation

- Joiner Transformation

- Union Transformation

- Normalizer Transformation

- Hierarchy Parser Transformation

- Hierarchy Builder Transformation

- Transaction Control Transformation

- Web Services Transformation

Components

- Secure Agents

- Cloud Data Governance and Catalog (CDGC)

- Informatica IDMC - Intelligent Data Management Cloud

Links

- Master Data Management (MDM)

- How Informatica Cloud Data Governance and Catalog uses Amazon Neptune for knowledge graphs | AWS Database Blog

DVC

DVC stands for "Data Version Control". It brings a Git-inspired workflow to data science and engineering: every version of a workflow is tracked, along with its data artifacts, models, and associated metrics.

DVC is a command-line tool and VS Code extension that helps you develop reproducible machine learning projects:

- Version your data and models. Store them in your cloud storage but keep their version info in your Git repo.

- Iterate fast with lightweight pipelines. When you make changes, only run the steps impacted by those changes.

- Track experiments in your local Git repo (no servers needed).

- Compare any data, code, parameters, model, or performance plots.

- Share experiments and automatically reproduce anyone's experiment.
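The "only run the steps impacted by those changes" idea can be sketched in plain Python. This is a toy illustration, not DVC's implementation: the helper names `file_hash` and `run_stage` and the JSON lock file are assumptions made up for the example. The sketch records a hash of each dependency after a stage runs and skips the stage when those hashes are unchanged.

```python
import hashlib
import json
from pathlib import Path


def file_hash(path: Path) -> str:
    """Return the MD5 hex digest of a file's contents."""
    return hashlib.md5(path.read_bytes()).hexdigest()


def run_stage(name, deps, action, lock_path: Path) -> bool:
    """Run `action` only if a dependency changed since the last recorded run.

    `name`, `deps`, `action`, and `lock_path` are hypothetical parameters:
    a stage label, a list of input file paths, a zero-argument callable,
    and the JSON file where dependency hashes are stored.
    Returns True if the stage ran, False if it was skipped.
    """
    lock = json.loads(lock_path.read_text()) if lock_path.exists() else {}
    current = {str(dep): file_hash(dep) for dep in deps}
    if lock.get(name) == current:
        return False  # dependencies unchanged, so skip the stage
    action()
    lock[name] = current  # remember the hashes this run was based on
    lock_path.write_text(json.dumps(lock, indent=2))
    return True
```

Calling `run_stage` twice in a row with untouched inputs runs the action once; editing any input file makes the next call run it again.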

GitHub - iterative/dvc: 🦉 ML Experiments Management with Git

Tracking ML Experiments With Data Version Control

dbt

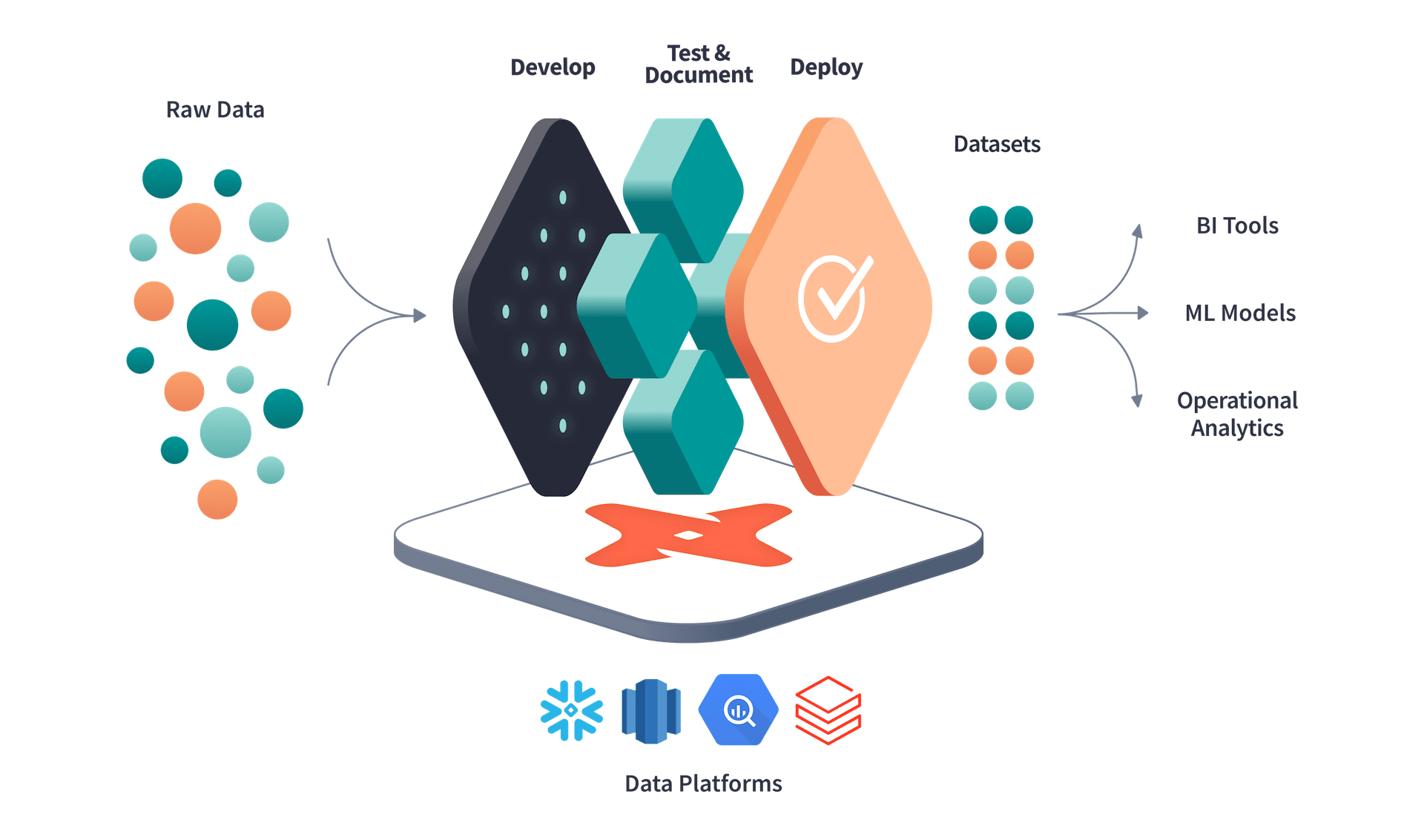

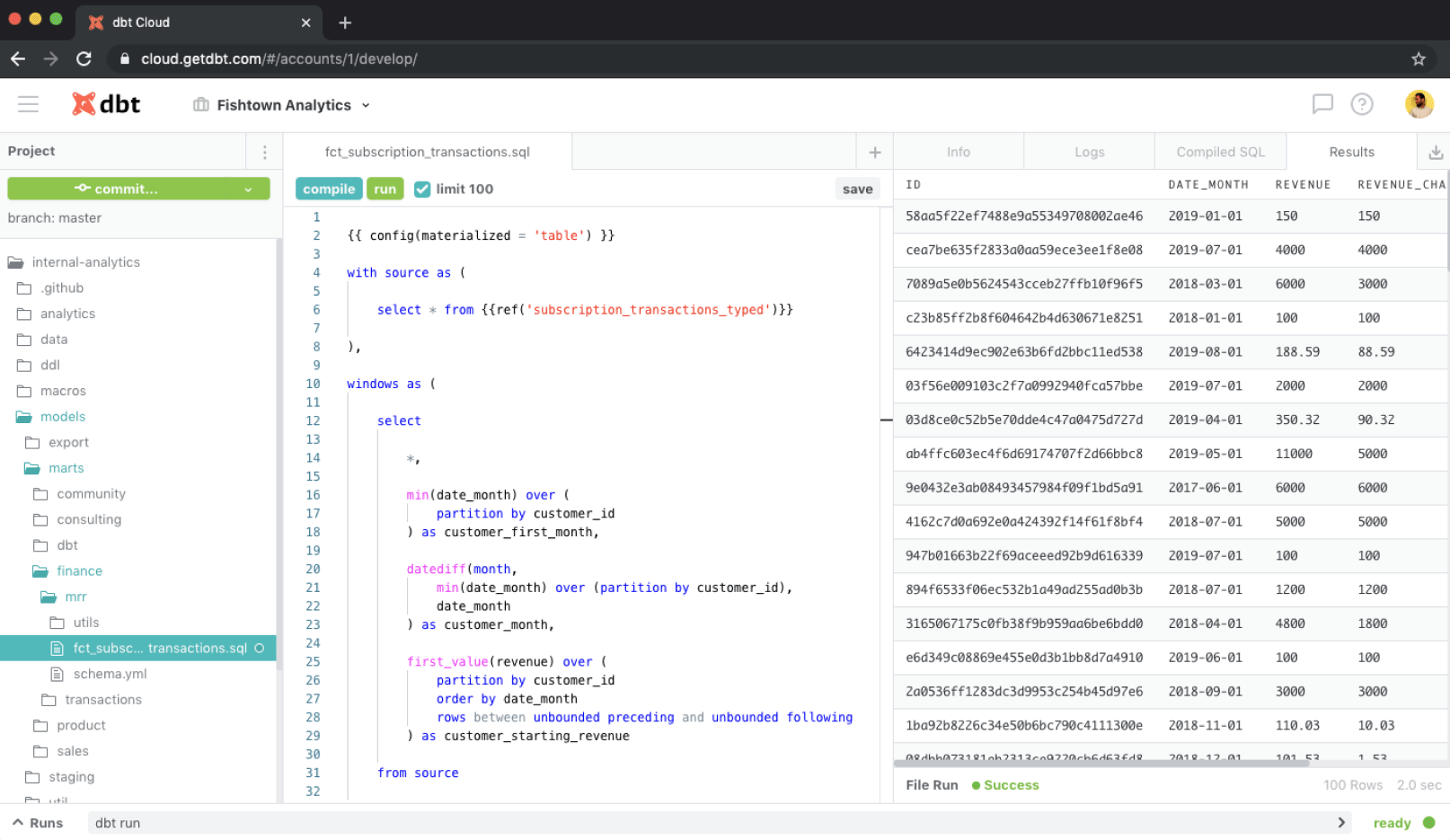

Analytics engineering is the data transformation work that happens between loading data into your warehouse and analyzing it. dbt allows anyone comfortable with SQL to own that workflow.

With dbt, data teams work directly within the warehouse to produce trusted datasets for reporting, ML modeling, and operational workflows.

dbt is a SQL-first transformation workflow that lets teams quickly and collaboratively deploy analytics code following software engineering best practices like modularity, portability, CI/CD, and documentation. Now anyone on the data team can safely contribute to production-grade data pipelines.
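Modularity in dbt comes from models that reference each other with `{{ ref('...') }}`, which lets the tool work out a build order. The sketch below imitates that idea in plain Python and is not dbt's own code: the `MODELS` dictionary, the model names, and the `build_order` helper are all hypothetical, and the regular expression only handles the simplest form of `ref`.

```python
import re
from graphlib import TopologicalSorter

# Matches the simplest form of a ref call, e.g. {{ ref('stg_orders') }}.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")

# Hypothetical models: name -> SQL text.
MODELS = {
    "customer_revenue": (
        "select c.name, sum(o.amount) as revenue "
        "from {{ ref('stg_orders') }} o "
        "join {{ ref('stg_customers') }} c on o.customer_id = c.id "
        "group by c.name"
    ),
    "stg_orders": "select id, customer_id, amount from raw.orders",
    "stg_customers": "select id, name from raw.customers",
}


def build_order(models: dict) -> list:
    """Return model names ordered so every model follows the models it refs."""
    # Map each model to the set of models it depends on.
    graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(build_order(MODELS))
```

In this example both staging models are ordered before `customer_revenue`; the relative order of the two staging models is not guaranteed.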

- https://www.getdbt.com

- What is dbt?

- What is Analytics Engineering?

- Getting Started with dbt (Data Build Tool): A Beginner’s Guide to Building Data Transformations | by Suffyan Asad | Medium

- GitHub - dbt-labs/dbt-core: dbt enables data analysts and engineers to transform their data using the same practices that software engineers use to build applications.

- Setting Up dbt and Connecting to Snowflake

- dbt vs Airflow vs Keboola

- dbt vs Airflow: Which data tool is best for your organization?

Airbyte

Airbyte is an open-source data integration platform for ETL/ELT pipelines that move data from APIs, databases, and files into data warehouses, data lakes, and data lakehouses. It is available both self-hosted and cloud-hosted.

Its stated goal is to move data from any source to any destination. Airbyte advertises a catalog of 300+ connectors for APIs, databases, data warehouses, and data lakes.

Airbyte | Open-Source Data Integration Platform | ELT tool

Getting Started | Airbyte Documentation

CDC

To support CDC, Airbyte uses Debezium internally.
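Log-based CDC turns each row change in the source into an event that a consumer replays against the destination. The sketch below is a simplified illustration of that replay step, not Airbyte's or Debezium's code: the event dictionaries borrow the `op`, `before`, and `after` field names from Debezium's change event envelope, while `apply_change_event` and the `id` key are assumptions for the example.

```python
def apply_change_event(table: dict, event: dict, key: str = "id") -> None:
    """Apply one change event to an in-memory replica keyed by primary key.

    `op` values follow Debezium's convention: "c" (create), "u" (update),
    "d" (delete), and "r" (snapshot read).
    """
    op = event["op"]
    if op in ("c", "r", "u"):
        row = event["after"]  # row state after the change
        table[row[key]] = row
    elif op == "d":
        # Deletes carry the last known row state in "before".
        table.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


if __name__ == "__main__":
    replica = {}
    events = [
        {"op": "c", "before": None, "after": {"id": 1, "name": "Ada"}},
        {"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}},
        {"op": "c", "before": None, "after": {"id": 2, "name": "Bob"}},
        {"op": "d", "before": {"id": 2, "name": "Bob"}, "after": None},
    ]
    for event in events:
        apply_change_event(replica, event)
    print(replica)
```

Because deletes arrive as explicit events, the replica can remove rows that a cursor-based query against the source would never see.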

Airbyte's incremental Change Data Capture (CDC) replication

How useful is Airbytes in production pipelines? : r/dataengineering

Airbyte’s replication modes

- What is Airbyte’s ELT approach to data integration?

- Why is ELT preferred over ETL?

- What is a cursor?

- What is a primary key used for?

- What is the difference between full refresh replication and incremental sync replication?

- What does it mean to append data rather than overwrite it in the destination?

- How is incremental sync with deduped history different from incremental sync with append?

- What are the advantages of log-based change data capture (CDC) replication versus standard replication?

- Which replication mode should you choose?
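Two of the questions above, appending versus deduplicating and the role of the primary key, can be illustrated with a small sketch. It is a simplification under stated assumptions rather than Airbyte's implementation: `sync_append`, `dedupe_latest`, and the `id` and `updated_at` field names are hypothetical.

```python
def sync_append(destination: list, batch: list) -> list:
    """Append mode: keep every synced version of every record."""
    return destination + batch


def dedupe_latest(rows: list, primary_key: str = "id", cursor: str = "updated_at") -> list:
    """Deduped view: keep only the newest version of each primary key."""
    latest = {}
    for row in rows:
        pk = row[primary_key]
        # Replace the stored row when this one is at least as recent.
        if pk not in latest or row[cursor] >= latest[pk][cursor]:
            latest[pk] = row
    return list(latest.values())


if __name__ == "__main__":
    history = sync_append([], [{"id": 1, "status": "new", "updated_at": "2024-01-01"}])
    history = sync_append(history, [{"id": 1, "status": "paid", "updated_at": "2024-01-02"}])
    print(len(history))            # both versions of record 1 are kept
    print(dedupe_latest(history))  # only the latest version remains
```

The primary key is what makes the deduped view possible: without it there is no way to tell that two rows are versions of the same record.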

| Full refresh replication | Incremental sync replication |
|---|---|
| The entire data set will be retrieved from the source and sent to the destination on each sync run. | Only records that have been inserted or updated in the source system since the previous sync run are sent to the destination. |
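The difference between the two modes in the table can be sketched as follows. This is a minimal illustration, assuming each source record carries an `updated_at` field used as the cursor; the function names and the field name are hypothetical and not part of Airbyte's API.

```python
def full_refresh(source_rows: list) -> list:
    """Full refresh: send every source record on every sync run."""
    return list(source_rows)


def incremental_sync(source_rows: list, last_cursor, cursor_field: str = "updated_at"):
    """Incremental sync: send only records newer than the saved cursor.

    Returns the records to send and the cursor value to save for the next run.
    """
    new_rows = [
        row for row in source_rows
        if last_cursor is None or row[cursor_field] > last_cursor
    ]
    # Advance the cursor to the newest value seen; keep it if nothing is new.
    new_cursor = max((row[cursor_field] for row in new_rows), default=last_cursor)
    return new_rows, new_cursor


if __name__ == "__main__":
    source = [
        {"id": 1, "updated_at": "2024-01-01"},
        {"id": 2, "updated_at": "2024-01-02"},
    ]
    batch, cursor = incremental_sync(source, None)    # first run sends everything
    source.append({"id": 3, "updated_at": "2024-01-03"})
    batch, cursor = incremental_sync(source, cursor)  # second run sends only id 3
    print(batch, cursor)
```

Note that a cursor-based sync like this one cannot detect records deleted in the source, and the strict `>` comparison can miss records that share a cursor value with the last synced record.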

An overview of Airbyte’s replication modes | Airbyte