AWS SageMaker

Amazon SageMaker Technical Deep Dive Series - YouTube

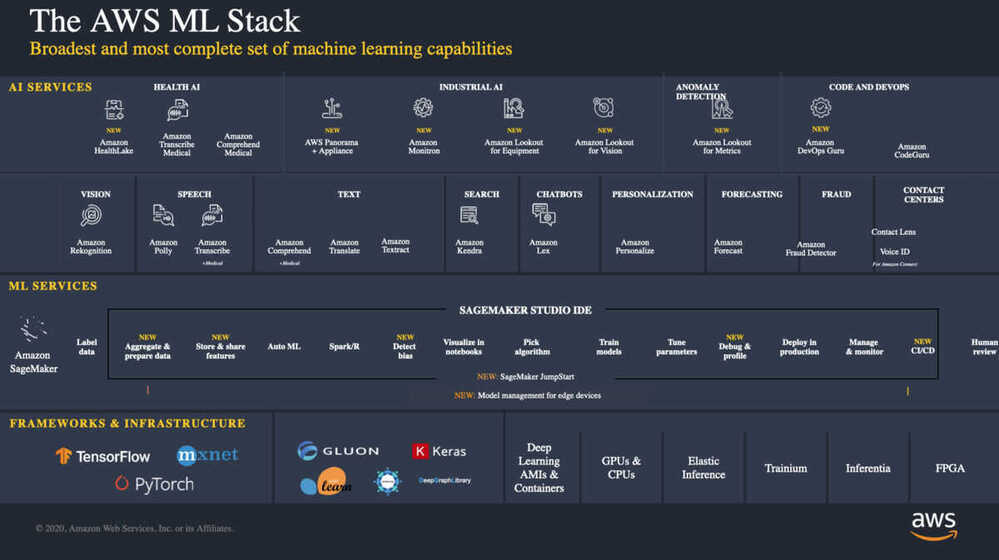

Amazon SageMaker is a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy machine learning (ML) models quickly. SageMaker removes the heavy lifting from each step of the machine learning process to make it easier to develop high quality models.

Traditional ML development is a complex, expensive, iterative process made even harder because there are no integrated tools for the entire machine learning workflow. You need to stitch together tools and workflows, which is time-consuming and error-prone. SageMaker solves this challenge by providing all of the components used for machine learning in a single toolset so models get to production faster with much less effort and at lower cost.

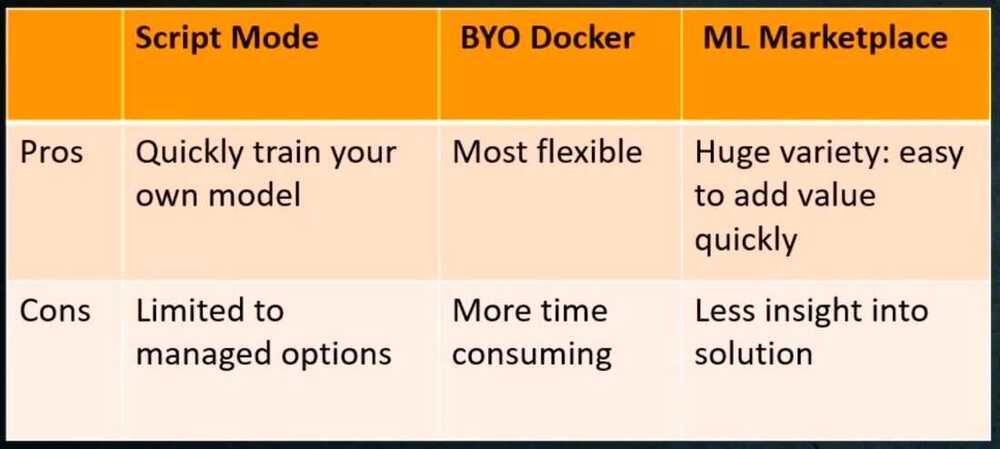

Many ways to train models on SageMaker

- Built-in Algorithms

- Script Mode

- Docker container

- AWS ML Marketplace

- Notebook instance
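Built-in algorithms, script mode, and custom Docker containers all end up as a training job that runs a container image against data in S3. A minimal sketch of the request such a job needs, shaped for boto3's `create_training_job`. The job name, image URI, role ARN, bucket, instance type, and volume size are hypothetical placeholders, and the helper `build_training_job_request` is only for illustration.

```python
def build_training_job_request(job_name, image_uri, role_arn,
                               train_s3_uri, output_s3_uri,
                               instance_type="ml.m5.xlarge"):
    """Return keyword arguments shaped for boto3's create_training_job."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,      # built-in, framework, or custom image
            "TrainingInputMode": "File",     # copy the data to the instance first
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": 1,
            "VolumeSizeInGB": 30,            # assumed size, adjust to the dataset
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


# All values below are placeholders, not real resources.
request = build_training_job_request(
    job_name="demo-training-job",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/my-training-image:latest",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    train_s3_uri="s3://example-bucket/train/",
    output_s3_uri="s3://example-bucket/output/",
)

# To submit it (needs boto3 and AWS credentials):
#   import boto3
#   boto3.client("sagemaker").create_training_job(**request)
```

The SageMaker Python SDK wraps the same request in its `Estimator` classes, so in practice you rarely build this dictionary by hand.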

Amazon SageMaker includes the following features

SageMaker Studio

An integrated machine learning environment where you can build, train, deploy, and analyze your models all in the same application.

SageMaker Model Registry

Versioning, artifact and lineage tracking, approval workflow, and cross-account support for deployment of your machine learning models.

SageMaker Projects

Create end-to-end ML solutions with CI/CD by using SageMaker projects.

SageMaker Model Building Pipelines

Create and manage machine learning pipelines integrated directly with SageMaker jobs.

SageMaker ML Lineage Tracking

Track the lineage of machine learning workflows.

SageMaker Data Wrangler

Import, analyze, prepare, and featurize data in SageMaker Studio. You can integrate Data Wrangler into your machine learning workflows to simplify and streamline data pre-processing and feature engineering using little to no coding. You can also add your own Python scripts and transformations to customize your data prep workflow.

SageMaker Feature Store

A centralized store for features and associated metadata so features can be easily discovered and reused. You can create two types of stores, an Online Store or an Offline Store. The Online Store can be used for low-latency, real-time inference use cases, and the Offline Store can be used for training and batch inference.
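The online/offline split is easier to see with a toy model. The sketch below is plain Python, not the Feature Store API: `ToyFeatureStore` and its methods are hypothetical names. It assumes the online side keeps only the latest record per ID (fast lookups at inference time) while the offline side keeps the full history (training and batch inference).

```python
class ToyFeatureStore:
    """Toy illustration only; not the SageMaker Feature Store API."""

    def __init__(self):
        self.online = {}    # record_id -> latest record only
        self.offline = []   # append-only history of every record

    def put_record(self, record_id, event_time, features):
        record = {"record_id": record_id, "event_time": event_time, **features}
        self.offline.append(record)
        current = self.online.get(record_id)
        # The online side is overwritten only by a record that is at least as recent.
        if current is None or event_time >= current["event_time"]:
            self.online[record_id] = record

    def get_record(self, record_id):
        """Low-latency lookup of the latest values (real-time inference)."""
        return self.online.get(record_id)

    def history(self, record_id):
        """Every version ever written (training, batch inference)."""
        return [r for r in self.offline if r["record_id"] == record_id]


store = ToyFeatureStore()
store.put_record("cust-1", event_time=1, features={"avg_basket": 20.0})
store.put_record("cust-1", event_time=2, features={"avg_basket": 35.0})

latest = store.get_record("cust-1")
past = store.history("cust-1")
print(latest["avg_basket"], len(past))
```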

SageMaker JumpStart

Learn about SageMaker features and capabilities through curated 1-click solutions, example notebooks, and pretrained models that you can deploy. You can also fine-tune the models and deploy them.

SageMaker Clarify

Improve your machine learning models by detecting potential bias, and help explain the predictions that models make.
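Two simple pre-training bias checks give a feel for what "detecting potential bias" means on a dataset: class imbalance between two groups, and the difference in the proportion of positive labels. A minimal sketch in plain Python, not the SageMaker API; the group counts are made-up illustration data and the function names are hypothetical.

```python
def class_imbalance(n_a, n_d):
    """(n_a - n_d) / (n_a + n_d): 0 means balanced groups, +/-1 means one group only."""
    return (n_a - n_d) / (n_a + n_d)


def difference_in_positive_proportions(pos_a, n_a, pos_d, n_d):
    """Share of positive labels in group a minus the share in group d."""
    return pos_a / n_a - pos_d / n_d


# Made-up counts: group a has 800 rows (400 positive), group d has 200 rows (50 positive).
ci = class_imbalance(n_a=800, n_d=200)
dpl = difference_in_positive_proportions(pos_a=400, n_a=800, pos_d=50, n_d=200)
print(f"class imbalance = {ci:.2f}, difference in positive proportions = {dpl:.2f}")
```

Values far from 0 on either metric suggest the data over-represents or favors one group before any model is trained.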

SageMaker Edge Manager

Optimize custom models for edge devices, create and manage fleets, and run models with an efficient runtime.

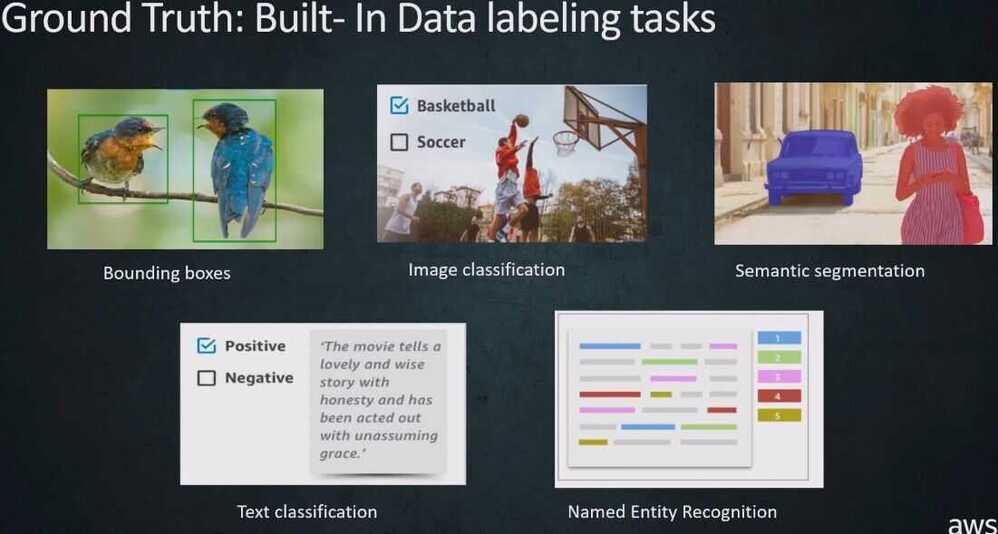

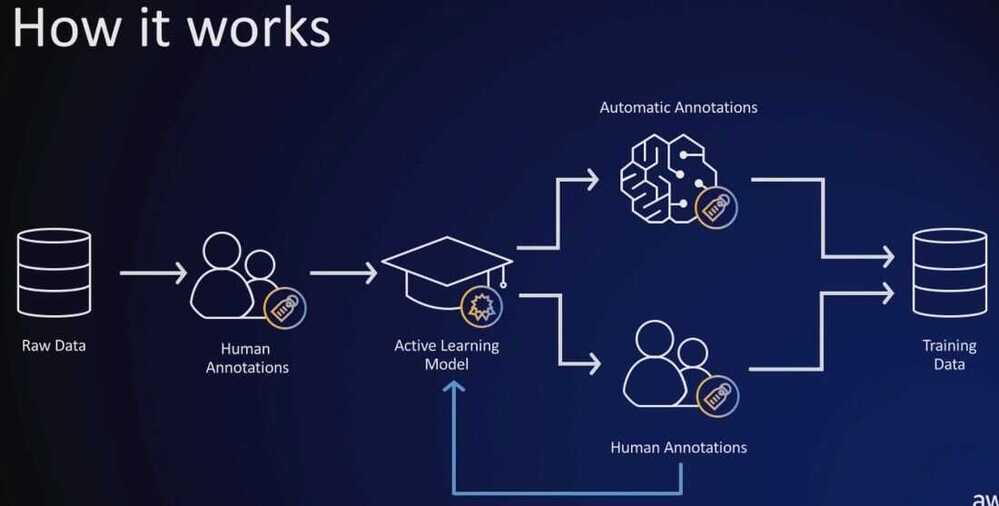

SageMaker Ground Truth

Build high-quality training datasets by using workers along with machine learning to create labeled datasets. Automated data labeling can reduce labeling costs by up to 70%.

Workers

- Amazon Mechanical Turk workers

- Private labeling workforce

- Third-party vendors

Label Consolidation

- Majority Voting

- Probabilities
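When several workers label the same item, the two consolidation ideas above can be sketched in a few lines. This is the plain textbook version, with hypothetical function names; the consolidation that Ground Truth itself applies may be more elaborate (for example, weighting workers differently).

```python
from collections import Counter


def majority_vote(labels):
    """Return the most common label and the share of workers who chose it.

    Ties go to the label that was seen first.
    """
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


def label_probabilities(labels):
    """Return the fraction of workers that chose each label."""
    total = len(labels)
    return {label: count / total for label, count in Counter(labels).items()}


worker_labels = ["cat", "cat", "dog"]   # three workers, one image
winner, confidence = majority_vote(worker_labels)
probabilities = label_probabilities(worker_labels)
print(winner, round(confidence, 2), probabilities)
```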

Amazon Augmented AI (Amazon A2I)

Build the workflows required for human review of ML predictions. Amazon A2I brings human review to all developers, removing the undifferentiated heavy lifting associated with building human review systems or managing large numbers of human reviewers.

SageMaker Studio Notebooks

The next generation of SageMaker notebooks, which include AWS Single Sign-On (AWS SSO) integration, fast start-up times, and single-click sharing.

SageMaker Experiments

Experiment management and tracking. You can use the tracked data to reconstruct an experiment, incrementally build on experiments conducted by peers, and trace model lineage for compliance and audit verifications.

SageMaker Debugger

Inspect training parameters and data throughout the training process. Automatically detect and alert users to commonly occurring errors such as parameter values getting too large or small.
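The "too large or too small" check can be pictured as a rule applied to the values captured at each training step. A minimal sketch in plain Python, not the Debugger API: the function name and both thresholds are hypothetical and would need tuning for a real model.

```python
import math


def check_values(name, values, large=1e4, small=1e-7):
    """Return warning strings for a list of parameter or gradient values."""
    if not values:
        return []
    if any(math.isnan(v) or math.isinf(v) for v in values):
        return [f"{name}: contains NaN or inf"]
    largest = max(abs(v) for v in values)
    warnings = []
    if largest > large:
        warnings.append(f"{name}: exploding, max |value| = {largest:.3g}")
    if largest < small:
        warnings.append(f"{name}: vanishing, max |value| = {largest:.3g}")
    return warnings


healthy = check_values("layer1.grad", [0.01, -0.02, 0.005])
exploding = check_values("layer2.grad", [1e6, 3.0])
vanishing = check_values("layer3.grad", [1e-9, -2e-10])
broken = check_values("layer4.grad", [0.1, float("nan")])

for warning in exploding + vanishing + broken:
    print(warning)
```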

SageMaker Autopilot

Users without machine learning knowledge can quickly build classification and regression models.

- Good for: classification, regression, data with some missing values; PCA is OK

- Not good for: vision, text, sequence-based data, or data that is mostly missing

    - You need feature interpretation upfront

    - You need pretrained models

- Remember

    - Try to include as much domain knowledge as you can in the features

    - You might have to wait upfront, but it saves you time in the end

    - You will get all the code generated for you

SageMaker Model Monitor

Monitor and analyze models in production (endpoints) to detect data drift and deviations in model quality.
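Data drift means the inputs an endpoint receives no longer look like the data the model was trained on. A minimal sketch of one way to flag it for a single numeric feature: compare the live mean with a baseline computed from training data. This is plain Python, not the Model Monitor API; the function names, the sample values, and the `max_shift` threshold are hypothetical.

```python
from statistics import mean, stdev


def baseline_stats(values):
    """Summarize a feature from the training data."""
    return {"mean": mean(values), "std": stdev(values)}


def has_drifted(baseline, live_values, max_shift=3.0):
    """Flag drift when the live mean moves more than max_shift baseline
    standard deviations away from the baseline mean."""
    if baseline["std"] == 0:
        return mean(live_values) != baseline["mean"]
    shift = abs(mean(live_values) - baseline["mean"]) / baseline["std"]
    return shift > max_shift


baseline = baseline_stats([10, 11, 9, 10, 12, 8])    # training-time values
steady = has_drifted(baseline, [10.5, 9.5, 10, 11])  # similar traffic
shifted = has_drifted(baseline, [15, 16, 14, 17])    # clearly different traffic
print(steady, shifted)
```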

SageMaker Neo

Train machine learning models once, then run anywhere in the cloud and at the edge.

Amazon Elastic Inference

Speed up the throughput and decrease the latency of getting real-time inferences.

Reinforcement Learning

Maximize the long-term reward that an agent receives as a result of its actions.
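"Long-term reward" is usually formalized as the discounted return: each future reward is multiplied by a discount factor raised to the number of steps until it arrives. A minimal sketch, with a made-up reward sequence and an assumed discount factor `gamma`:

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**t * rewards[t], accumulated from the last step backwards."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Made-up episode: 1 + 0.5 * 0 + 0.25 * 2 = 1.5
episode_return = discounted_return([1, 0, 2], gamma=0.5)
print(episode_return)
```

A `gamma` close to 1 makes the agent value distant rewards almost as much as immediate ones; a small `gamma` makes it short-sighted.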

SageMaker Processing

Analyze and preprocess data, tackle feature engineering, and evaluate models.

Batch Transform

Preprocess datasets, run inference when you don't need a persistent endpoint, and associate input records with inferences to assist the interpretation of results.
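Associating input records with inferences is configured through the `DataProcessing` part of a transform job. A minimal sketch of a request shaped for boto3's `create_transform_job`, assuming CSV input whose first column is a record ID the model should not see. The job name, model name, bucket, and instance type are hypothetical placeholders, as is the helper function itself.

```python
def build_transform_job_request(job_name, model_name, input_s3_uri, output_s3_uri):
    """Return keyword arguments shaped for boto3's create_transform_job."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": input_s3_uri,
            }},
            "ContentType": "text/csv",
            "SplitType": "Line",          # one record per line
        },
        "TransformOutput": {
            "S3OutputPath": output_s3_uri,
            "Accept": "text/csv",
            "AssembleWith": "Line",
        },
        "TransformResources": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1},
        "DataProcessing": {
            "InputFilter": "$[1:]",       # drop column 0 (the ID) before inference
            "JoinSource": "Input",        # append the prediction to the input record
            "OutputFilter": "$[0,-1]",    # keep only the ID and the prediction
        },
    }


# All values below are placeholders, not real resources.
transform_request = build_transform_job_request(
    job_name="demo-batch-job",
    model_name="demo-model",
    input_s3_uri="s3://example-bucket/batch-input/",
    output_s3_uri="s3://example-bucket/batch-output/",
)

# To submit it (needs boto3 and AWS credentials):
#   import boto3
#   boto3.client("sagemaker").create_transform_job(**transform_request)
```

No endpoint is created: the instances start, process the files under the input prefix, write results to the output path, and shut down.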

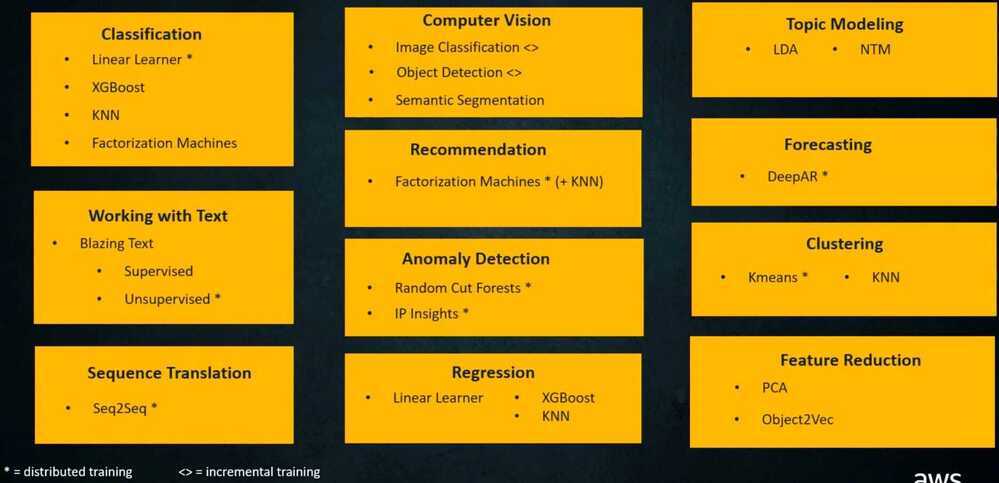

Built-in Algorithms

Instances

- t - burstable general purpose (small, low cost)

- m - general purpose

- c - compute optimized

- p - GPU

Best practices

- Pick the right size - 5 GB default

    - Think: store work on the EBS volume under /home/ec2-user/SageMaker; only that directory persists across stop and start

- Add or create a Git repository

- Configure security settings

    - Encryption

    - Root volume access

    - Internet access

    - VPC connection

- Use a lifecycle config

    - Runs when the notebook is created or started

    - Install packages, copy data

    - Run in background with '&'

- Attach a portion of a GPU for local inference

    - Size, version, bandwidth
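The lifecycle config points above can be sketched as follows. Lifecycle scripts have to finish within 5 minutes, which is why slow steps such as package installs are pushed to the background with `nohup ... &`. The script content, the package name, the log path, and the config name are hypothetical; the request is shaped for boto3's `create_notebook_instance_lifecycle_config`, which expects the script base64-encoded.

```python
import base64

# Hypothetical on-start script: the slow install runs in the background
# so the script itself returns well inside the time limit.
ON_START_SCRIPT = """#!/bin/bash
set -e
nohup pip install --quiet xgboost > /home/ec2-user/SageMaker/install.log 2>&1 &
"""


def build_lifecycle_config_request(name, on_start_script):
    """Return keyword arguments shaped for create_notebook_instance_lifecycle_config."""
    encoded = base64.b64encode(on_start_script.encode("utf-8")).decode("ascii")
    return {
        "NotebookInstanceLifecycleConfigName": name,
        "OnStart": [{"Content": encoded}],   # runs on every start, including the first
    }


lifecycle_request = build_lifecycle_config_request("demo-install-packages", ON_START_SCRIPT)

# To submit it (needs boto3 and AWS credentials):
#   import boto3
#   boto3.client("sagemaker").create_notebook_instance_lifecycle_config(**lifecycle_request)
```

An `OnCreate` entry with the same shape runs once, when the notebook instance is first created.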

SageMaker SDK and examples

- https://pypi.org/project/sagemaker

- https://sagemaker.readthedocs.io/en/stable/

- https://github.com/aws/sagemaker-python-sdk

- https://github.com/aws/amazon-sagemaker-examples

- https://github.com/aruncs2005/fraud-detection-workshop.git

SageMaker Lifecycle configurations (Auto shutdown)

- https://aws.amazon.com/blogs/machine-learning/save-costs-by-automatically-shutting-down-idle-resources-within-amazon-sagemaker-studio

- https://github.com/aws-samples/sagemaker-studio-auto-shutdown-extension

- https://github.com/aws-samples/amazon-sagemaker-notebook-instance-lifecycle-config-samples

- https://github.com/aws-samples/sagemaker-studio-auto-shutdown-extension/tree/main/auto-installer

Managed Spot Training

- https://towardsdatascience.com/a-quick-guide-to-using-spot-instances-with-amazon-sagemaker-b9cfb3a44a68

- https://aws.amazon.com/blogs/aws/managed-spot-training-save-up-to-90-on-your-amazon-sagemaker-training-jobs
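In terms of the training job request, managed spot training comes down to three settings: turn it on, allow extra wait time for spot capacity, and point at a checkpoint location so an interrupted job can resume. A minimal sketch that adds them to an existing `create_training_job` request dictionary and computes the savings from the two durations a finished job reports. The helper names, the default time limits, and the S3 path are hypothetical.

```python
def add_managed_spot(request, checkpoint_s3_uri, max_run_s=3600, max_wait_s=7200):
    """Return a copy of a create_training_job request with spot settings added."""
    if max_wait_s < max_run_s:
        raise ValueError("max_wait_s must be at least max_run_s")
    updated = dict(request)
    updated["EnableManagedSpotTraining"] = True
    updated["StoppingCondition"] = {
        "MaxRuntimeInSeconds": max_run_s,     # time spent actually training
        "MaxWaitTimeInSeconds": max_wait_s,   # training time plus waiting for capacity
    }
    updated["CheckpointConfig"] = {"S3Uri": checkpoint_s3_uri}
    return updated


def spot_savings_percent(training_seconds, billable_seconds):
    """Savings implied by TrainingTimeInSeconds and BillableTimeInSeconds."""
    return (1 - billable_seconds / training_seconds) * 100


# Placeholder request; a real one also needs the image, role, data, and resources.
spot_request = add_managed_spot(
    {"TrainingJobName": "demo-spot-job"},
    checkpoint_s3_uri="s3://example-bucket/checkpoints/",
)
savings = spot_savings_percent(training_seconds=1000, billable_seconds=300)
print(f"savings: {savings:.0f}%")
```

Checkpointing only helps if the training script itself saves and reloads checkpoints from the local checkpoint directory.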

Others

- https://aws.amazon.com/blogs/machine-learning/understanding-amazon-sagemaker-notebook-instance-networking-configurations-and-advanced-routing-options

- AWS Innovate | Intro to Deep Learning: Building an Image Classifier on Amazon SageMaker - YouTube

- Introducing the next generation of Amazon SageMaker: The center for all your data, analytics, and AI | AWS News Blog

- Amazon SageMaker Unified Studio: Getting started with analytics - YouTube

- AWS re:Invent 2024-Next-generation Amazon SageMaker: The center for data, analytics & AI(ANT206-NEW) - YouTube

- Amazon Bedrock IDE demo in Amazon SageMaker Unified Studio | Amazon Web Services - YouTube

- End To End Machine Learning Project Implementation Using AWS Sagemaker - YouTube

- What Happened to Amazon SageMaker? Changes and New Services Explained in Plain English - YouTube

- Amazon SageMaker Unified Studio Integration with Amazon QuickSight - YouTube

- Streamlining Data & AI Workflows with Amazon SageMaker Unified Studio | AWS Events - YouTube

- Introduction to data lineage in Amazon SageMaker Unified Studio | Amazon Web Services - YouTube

- 🚀 Amazon SageMaker Unified Studio + Bedrock | Build AI Agents Faster! - YouTube

- Amazon SageMaker overview | Amazon Web Services - YouTube