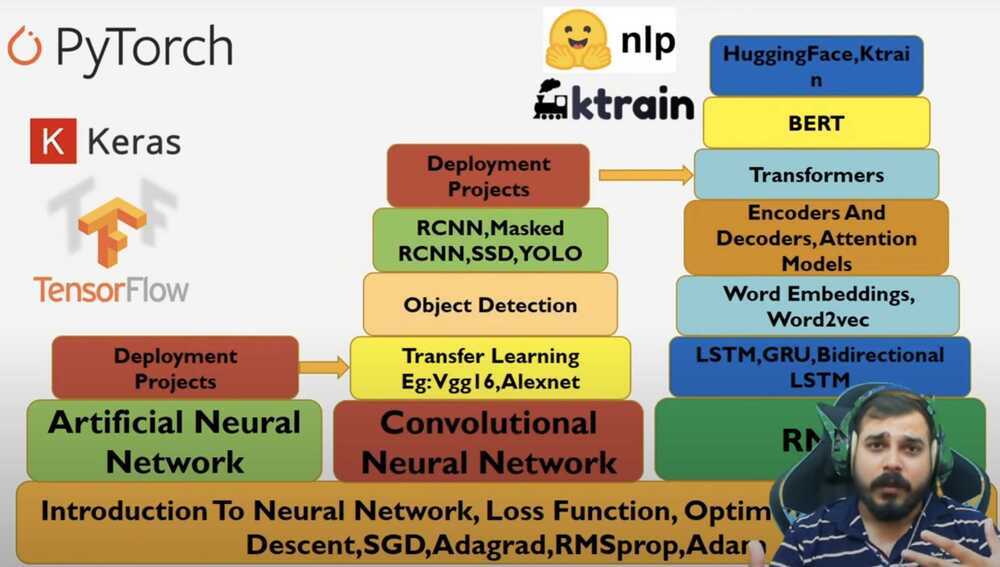

Roadmap

- Foundations - introduction to neural networks, loss functions, and optimizers (gradient descent, SGD, Adagrad, RMSProp, Adam)

- Adam is a popular default optimizer because it combines momentum with per-parameter learning rates that adapt as training goes on

- Activation functions - ReLU, sigmoid, tanh

- Geoffrey Hinton - co-author (with David Rumelhart and Ronald Williams) of the 1986 paper that popularized the backpropagation algorithm

- Inputs, weights, bias
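The foundational pieces above (inputs, weights, a bias, an activation function, a loss, and an optimizer) fit together in a few lines. The following is a minimal pure-Python sketch, not taken from the video. It defines ReLU, sigmoid and tanh and a single neuron. It then fits a linear neuron with a mean squared error loss, once with plain full-batch gradient descent and once with Adam. The toy data (y = 2x + 1), learning rate and step counts are assumptions chosen for illustration. The Adam update follows the standard formulation by Kingma and Ba with its usual defaults (beta1 = 0.9, beta2 = 0.999, eps = 1e-8).

```python
import math

# --- Activation functions ---
def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    return math.tanh(z)

def neuron(x, w, b, act=relu):
    """Weighted sum of the inputs plus the bias, passed through an activation."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + b
    return act(z)

# --- Toy regression data (hypothetical): y = 2x + 1 ---
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [2.0 * x + 1.0 for x in XS]

def mse_and_grads(w, b):
    """MSE loss of the linear neuron w*x + b and its gradients w.r.t. w and b."""
    n = len(XS)
    errs = [(w * x + b) - y for x, y in zip(XS, YS)]
    loss = sum(e * e for e in errs) / n
    dw = 2.0 * sum(e * x for e, x in zip(errs, XS)) / n
    db = 2.0 * sum(errs) / n
    return loss, dw, db

def train_gd(lr=0.05, steps=2000):
    """Full-batch gradient descent: step against the gradient at a fixed rate."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        _, dw, db = mse_and_grads(w, b)
        w -= lr * dw
        b -= lr * db
    return w, b

def train_adam(lr=0.05, steps=3000, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: a momentum term (first moment) plus a per-parameter step size
    scaled by a running average of squared gradients (second moment)."""
    params = [0.0, 0.0]  # [w, b]
    m = [0.0, 0.0]       # first-moment estimates
    v = [0.0, 0.0]       # second-moment estimates
    for t in range(1, steps + 1):
        _, dw, db = mse_and_grads(params[0], params[1])
        for i, g in enumerate((dw, db)):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params[0], params[1]

if __name__ == "__main__":
    print("GD:  ", train_gd())
    print("Adam:", train_adam())
```

Running the file prints the (w, b) learned by each optimizer. Both should approach the true values w = 2, b = 1. SGD would differ from `train_gd` only in computing each step's gradient on a random mini-batch instead of the whole dataset.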

Artificial Neural Network (ANN)

- Weight Initialization

- Hyperparameter tuning

- How to decide how many hidden layers to use

- How to decide how many neurons to put in each hidden layer

- Keras Tuner

- AutoKeras
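The ANN topics above come down to a few decisions: how to initialize the weights, and which hyperparameters (number of hidden layers, neurons per layer) to pick. The following pure-Python sketch covers those decisions in three parts. First, it shows two common initialization schemes: Glorot (Xavier) uniform, and an untruncated variant of He normal. Second, a helper builds a fully connected network's parameters from those hyperparameters. Third, a bare-bones random search loop tries different settings. The search only mimics the idea behind Keras Tuner's `RandomSearch` tuner. It is not the Keras Tuner or AutoKeras API. The `objective` function is a hypothetical stand-in for "train the model and return its validation loss".

```python
import math
import random

def glorot_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform: U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    """He/Kaiming normal (untruncated): N(0, 2 / fan_in), often paired with ReLU."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def build_mlp(n_inputs, n_hidden_layers, units, n_outputs, init=glorot_uniform, seed=0):
    """Return (weights, biases) per layer; each weight matrix is fan_out x fan_in."""
    rng = random.Random(seed)
    sizes = [n_inputs] + [units] * n_hidden_layers + [n_outputs]
    layers = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        layers.append((init(fan_in, fan_out, rng), [0.0] * fan_out))  # biases start at zero
    return layers

def count_params(layers):
    """Total number of trainable weights and biases."""
    return sum(len(W) * len(W[0]) + len(b) for W, b in layers)

def random_search(space, objective, trials=20, seed=0):
    """Sample `trials` configurations from `space`; return (best_config, best_score).

    Lower scores are treated as better (e.g. a validation loss)."""
    rng = random.Random(seed)
    best_config, best_score = None, math.inf
    for _ in range(trials):
        config = {name: rng.choice(choices) for name, choices in space.items()}
        score = objective(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score

if __name__ == "__main__":
    # Hypothetical search space. A real objective would build the network,
    # train it, and return the validation loss; this placeholder simply
    # prefers the network with the fewest parameters.
    space = {"n_hidden_layers": [1, 2, 3], "units": [16, 32, 64]}

    def objective(cfg):
        return count_params(build_mlp(10, cfg["n_hidden_layers"], cfg["units"], 1))

    print(random_search(space, objective))
```

Random search is only one strategy. Keras Tuner also offers Hyperband and Bayesian optimization tuners, and AutoKeras searches over whole architectures rather than just layer counts and widths.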

Convolutional Neural Network (CNN)

- Convolution

- Image and video data

- Filters, Strides, Layers

- Transfer Learning
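Filters and strides are easiest to see in code. The following is a minimal pure-Python 2D convolution over a single-channel image with no padding ("valid" mode). Like most deep learning libraries, it actually computes cross-correlation: the filter is not flipped. The image and filter values are arbitrary examples. The output-size helper uses the standard formula floor((n + 2p - k) / s) + 1 for input size n, filter size k, padding p and stride s.

```python
def conv_output_size(n, k, stride=1, padding=0):
    """Output length along one axis: floor((n + 2*padding - k) / stride) + 1."""
    return (n + 2 * padding - k) // stride + 1

def conv2d(image, kernel, stride=1):
    """Single-channel 2D convolution (cross-correlation) with 'valid' padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = conv_output_size(len(image), kh, stride)
    out_w = conv_output_size(len(image[0]), kw, stride)
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            r0, c0 = i * stride, j * stride  # top-left corner of the current window
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[r0 + di][c0 + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

if __name__ == "__main__":
    image = [[1, 2, 3, 4],
             [5, 6, 7, 8],
             [9, 10, 11, 12],
             [13, 14, 15, 16]]
    kernel = [[1, 0],
              [0, -1]]  # arbitrary 2x2 filter: top-left minus bottom-right
    print(conv2d(image, kernel, stride=1))  # 3x3 feature map, every entry -5.0
    print(conv2d(image, kernel, stride=2))  # 2x2 feature map, every entry -5.0
```

Raising the stride from 1 to 2 shrinks the 3x3 feature map to 2x2 because the window skips every other position. A real CNN layer applies many such filters across all input channels and learns their values during training.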

Recurrent Neural Network (RNN)

- NLP

- Sequential data and sequence-to-sequence tasks

- Sentences (text data)

- Sales Forecasting

- Neural machine translation

- Hugging Face, ktrain
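What makes an RNN suited to sentences or sales figures is that it carries a hidden state from one time step to the next. The following is a minimal vanilla (Elman) RNN forward pass in pure Python: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The weights are illustrative placeholders and there is no training. In practice you would use an LSTM or GRU layer from a deep learning library, or the higher-level libraries listed above, rather than a hand-written loop.

```python
import math

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_forward(xs, W_xh, W_hh, b_h, h0=None):
    """Vanilla RNN: h_t = tanh(W_xh @ x_t + W_hh @ h_{t-1} + b_h).

    xs is a sequence of input vectors; returns the hidden state after each step."""
    h = list(h0) if h0 is not None else [0.0] * len(b_h)
    states = []
    for x in xs:
        pre = [a + c + bias for a, c, bias in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states

if __name__ == "__main__":
    # Illustrative 1-D input and 1-D hidden state; the weights are arbitrary.
    W_xh, W_hh, b_h = [[1.0]], [[0.5]], [0.0]
    # The same inputs in a different order give a different final state,
    # which is how an RNN can take word or time-step order into account.
    print(rnn_forward([[1.0], [0.0]], W_xh, W_hh, b_h)[-1])
    print(rnn_forward([[0.0], [1.0]], W_xh, W_hh, b_h)[-1])
```

For sequence-to-sequence tasks such as translation, an encoder RNN like this one summarizes the input sentence in its final hidden state, and a second RNN (the decoder) generates the output sequence from it.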

Complete Road Map To Prepare For Deep Learning🔥🔥🔥🔥 - YouTube