AI

- Model Evaluation

- Data Science

- Big Data

- Data Visualization

- ML Fundamentals

- ML Algorithms

- Deep Learning

- LLM

- Computer Vision

- NLP

- Move37 - Reinforcement Learning

- Pandas

- NumPy

- Scikit-learn / SciPy

- Libraries

- Courses

- Others / Resources / Interview / Learning

- Hackathons

- Solutions

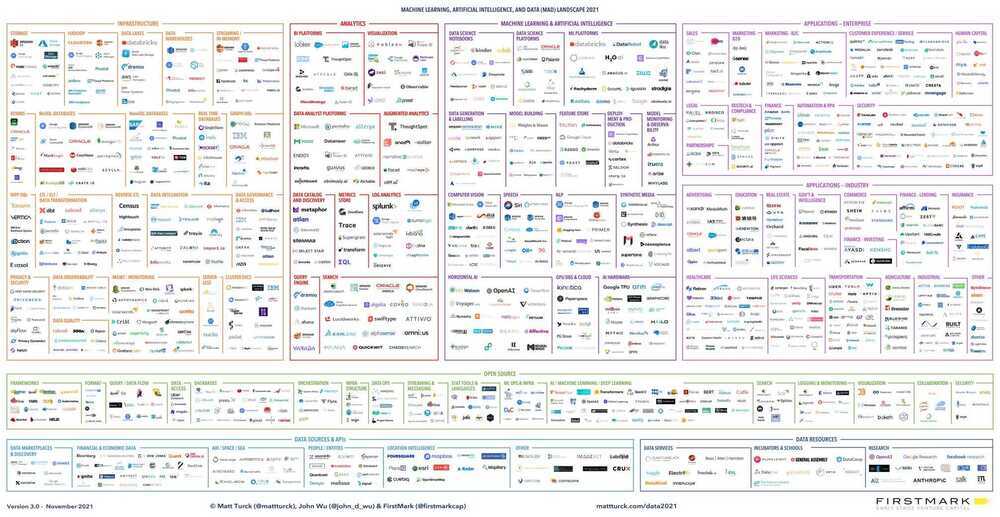

Data & AI Landscape

Interactive - FirstMark | 2024 MAD (ML/AI/Data) Landscape

Full Steam Ahead: The 2024 MAD (Machine Learning, AI & Data) Landscape – Matt Turck

AGI (Artificial General Intelligence) / Sentient / Intelligence Explosion / Technological singularity / Superintelligence

- ANI (Artificial Narrow Intelligence): the current state of AI

- ASI (Artificial Super Intelligence)

On the strongest version of this view, AGI would be intellectually, morally, ethically, and creatively superior to humans in every conceivable way.

An "intelligence explosion" is a hypothetical scenario in which a self-improving AI system rapidly, perhaps exponentially, increases its own intelligence until it surpasses human intelligence. The concept is closely tied to the "singularity": the first superintelligent machine could design even better machines, leading to a cascade of intelligence growth.

- Introduction - SITUATIONAL AWARENESS: The Decade Ahead

- Technological singularity - Wikipedia

- Ben Goertzel: Singularity, Sparks of AGI in GPT - YouTube

- Ben Goertzel - Open Ended vs Closed Minded Conceptions of Superintelligence - YouTube

- Christopher Hitchens on Fear and A.I. - YouTube

- Google's DeepMind Co-founder: AI Is Becoming More Dangerous And Threatening! - Mustafa Suleyman - YouTube

- Artificial consciousness - Wikipedia

- A.I. ‐ Humanity's Final Invention? - YouTube

- Once upon a time…AI created a religion about a Goat | by AJ | Medium

- DeepMind CEO Demis Hassabis + Google Co-Founder Sergey Brin: AGI by 2030? - YouTube

- Why Can’t We Tame AI? - Cal Newport

- Why the AI Revolution Has a Fatal Flaw - YouTube

- AI economic paradox

- AI isn't replacing radiologists - by Deena Mousa

- Leopold Aschenbrenner — 2027 AGI, China/US super-intelligence race, & the return of history - YouTube

- Will AI outsmart human intelligence? - with 'Godfather of AI' Geoffrey H...

- If AI is really changing everything… where’s the evidence? - YouTube

- Agentic Misalignment: How LLMs could be insider threats \ Anthropic

- The Thinking Game | Full documentary | Tribeca Film Festival official selection - YouTube

Links

- AI Index Report 2024 – Artificial Intelligence Index

- When Machines Think Ahead: The Rise of Strategic AI | by Hans Christian Ekne | Nov, 2024 | Towards Data Science

- AI Slop Is Killing Our Channel - YouTube

- Welcome to State of AI Report 2025

- The Limits of AI: Generative AI, NLP, AGI, & What’s Next? - YouTube

- Is Sora the Beginning of the End for OpenAI? - Cal Newport